Generative AI Space and the Mental Imagery of Alien Minds | Stephen Wolfram Writings

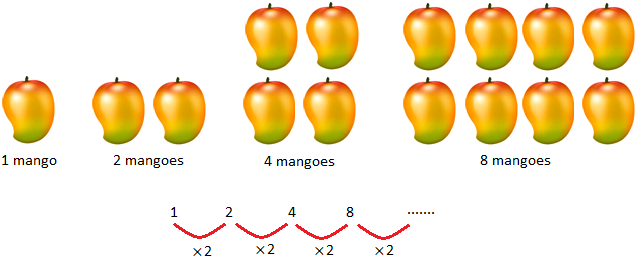


AIs and Alien Minds

How do alien minds perceive the world? It’s an old and oft-debated question in philosophy. And it now turns out to also be a question that rises to prominence in connection with the concept of the ruliad that’s emerged from our Wolfram Physics Project.

I’ve wondered about alien minds for a long time, and tried all sorts of ways to imagine what it might be like to see things from their point of view. But in the past I’ve never really had a way to build my intuition about it. That is, until now. So, what’s changed? It’s AI. Because in AI we finally have an accessible form of alien mind.

We typically go to a lot of trouble to train our AIs to produce results like we humans would. But what if we take a human-aligned AI, and modify it? Well, then we get something that’s in effect an alien AI: an AI aligned not with us humans, but with an alien mind.

So how can we see what such an alien AI (or alien mind) is “thinking”? A convenient way is to try to capture its “mental imagery”: the image it forms in its “mind’s eye”. Let’s say we use a typical generative AI to go from a description in human language, like “a cat in a party hat”, to a generated image:

It’s exactly the kind of image we’d expect, which isn’t surprising, because it comes from a generative AI that’s trained to “do as we would”. But now let’s imagine taking the neural net that implements this generative AI, and modifying its insides, say by resetting weights that appear in its neural net.

By doing this we’re in effect going from a human-aligned neural net to some kind of “alien” one. But this “alien” neural net will still produce some kind of image, because that’s what a neural net like this does. But what will the image be? Well, in effect, it’s showing us the mental imagery of the “alien mind” associated with the modified neural net.

But what does it actually look like? Well, here’s a sequence obtained by progressively modifying the neural net, in effect making it “progressively more alien”:

At first it’s still a very recognizable picture of “a cat in a party hat”. But it soon becomes more and more alien: the mental image in effect diverges further from the human one, until it no longer “looks like a cat”, and in the end looks, at least to us, rather random.

There are many details of how this works that we’ll be discussing below. But what’s important is that, by studying the effects of changing the neural net, we now have a systematic “experimental” platform for probing at least one kind of “alien mind”. We can think of what we’re doing as a kind of “artificial neuroscience”, probing not actual human brains, but neural net analogs of them.

And we’ll see many parallels to neuroscience experiments. For example, we’ll often be “knocking out” particular parts of our “neural net brain”, a little like how injuries such as strokes can knock out parts of a human brain. And we know that when a human brain suffers a stroke, this can lead to phenomena like “hemispatial neglect”, in which a stroke victim asked to draw a clock will end up drawing just one side of the clock, a little like the way the pictures of cats “degrade” when parts of the “neural net brain” are knocked out.

Of course, there are many differences between real brains and artificial neural nets. But most of the core phenomena we’ll observe here seem robust and fundamental enough that we can expect them to span very different kinds of “brains”: human, artificial and alien. And the result is that we can begin to build up intuition about what the worlds of different (and alien) minds can be like.

Generating Pictures with AIs

How does an AI manage to create a picture, say of a cat in a party hat? Well, the AI has to be trained on “what makes a reasonable picture”, and on how to determine what a picture is of. Then in some sense what the AI does is to start generating “reasonable” pictures at random, in effect continually checking what the picture it’s generating seems to be “of”, and tweaking it to guide it towards being a picture of what one wants.

So what counts as a “reasonable picture”? If one looks at billions of pictures, say on the web, there are lots of regularities. For example, the pixels aren’t random; nearby ones are usually highly correlated. If there’s a face, it’s usually more or less symmetrical. It’s more common to have blue at the top of a picture, and green at the bottom. And so on. And the important technological point is that it turns out to be possible to use a neural network to capture regularities in images, and to generate random images that exhibit them.

Here are some examples of “random images” generated in this way:

And the idea is that these images, while each is “random” in its specifics, will in general follow the “statistics” of the billions of images from the web on which the neural network has been “trained”. We’ll be talking more about images like these later. But for now suffice it to say that while some may look like abstract patterns, others seem to contain things like landscapes, human forms, etc. And what’s notable is that none just look like “random arrays of pixels”; they all show some kind of “structure”. And, yes, given that they’ve been trained from pictures on the web, it’s not too surprising that the “structure” sometimes includes things like human forms.
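One of the regularities mentioned above, that nearby pixels are highly correlated, is easy to measure directly. The following is a minimal sketch, assuming a toy grayscale image stored as a list of rows of floats (not any real dataset or model): it computes the Pearson correlation between horizontally adjacent pixels, which comes out near 0 for independently random pixels and near 1 for a smooth picture.

```python
import math
import random


def adjacent_correlation(image):
    """Pearson correlation between each pixel and its right-hand neighbour.

    `image` is a toy grayscale picture: a list of rows of floats.
    """
    left, right = [], []
    for row in image:
        for a, b in zip(row, row[1:]):
            left.append(a)
            right.append(b)
    n = len(left)
    mean_l = sum(left) / n
    mean_r = sum(right) / n
    cov = sum((a - mean_l) * (b - mean_r) for a, b in zip(left, right))
    var_l = sum((a - mean_l) ** 2 for a in left)
    var_r = sum((b - mean_r) ** 2 for b in right)
    return cov / math.sqrt(var_l * var_r)


rng = random.Random(0)
SIZE = 64
# Every pixel drawn independently: no structure at all.
noise = [[rng.random() for _ in range(SIZE)] for _ in range(SIZE)]
# A smooth diagonal gradient with a little noise on top.
smooth = [[(x + y) / (2 * SIZE) + 0.05 * rng.random() for x in range(SIZE)]
          for y in range(SIZE)]

print(round(adjacent_correlation(noise), 3))   # near 0
print(round(adjacent_correlation(smooth), 3))  # near 1
```

A generative model trained on real pictures has, in effect, to reproduce many statistics of this kind at once; this single number is just the simplest one to compute.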

But, OK, let’s say we specifically want a picture of a cat in a party hat. From all the almost infinitely large number of possible “well-structured” random images we might generate, how do we get one that’s of a cat in a party hat? Well, a first question is: how would we know if we’ve succeeded? As humans, we could just look and see what our image is of. But it turns out we can also train a neural net to do this (and, no, it doesn’t always get it exactly right):

How is the neural net trained? The basic idea is to take billions of images, say from the web, for which corresponding captions have been provided. Then one progressively tweaks the parameters of the neural net to make it reproduce these captions when it’s fed the corresponding images. But the critical point is that the neural net turns out to do more: it also successfully produces “reasonable” captions for images it’s never seen before. What does “reasonable” mean? Operationally, it means captions that are similar to what we humans might assign. And, yes, it’s far from obvious that a computationally constructed neural net will behave at all like us humans, and the fact that it does is presumably telling us fundamental things about how human brains work.

But for now what’s important is that we can use this captioning capability to progressively guide the images we produce towards what we want. Start from “pure randomness”. Then try to “structure the randomness” to make a “reasonable” picture, but at every step see in effect “what the caption would be”. And try to “go in a direction” that “leads towards” a picture with the caption we want. Or, in other words, progressively try to get to a picture that’s of what we want.
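The guidance loop just described can be illustrated with a deliberately tiny toy. This is not how a real image generator works; it’s a sketch under stated assumptions, in which a periodic neighbour-averaging step stands in for the “reasonable picture” prior, and a hypothetical `target_score` function stands in for the captioning network’s judgment of how well the picture matches the request. Each iteration structures the randomness a little, then nudges every pixel up the gradient of the score.

```python
import random


def smooth(pixels):
    """Stand-in 'reasonable picture' prior: periodic [1/4, 1/2, 1/4] neighbour averaging."""
    n = len(pixels)
    return [(pixels[i - 1] + 2 * pixels[i] + pixels[(i + 1) % n]) / 4 for i in range(n)]


def target_score(pixels, target):
    """Hypothetical stand-in for the captioner: higher means 'closer to what was asked for'."""
    return -sum((p - t) ** 2 for p, t in zip(pixels, target))


def guided_generate(target, steps=200, rate=0.1, seed=0):
    rng = random.Random(seed)
    pixels = [rng.random() for _ in target]  # start from pure randomness
    for _ in range(steps):
        pixels = smooth(pixels)  # "structure the randomness"
        # The gradient of target_score with respect to each pixel is 2 * (t - p);
        # take a small step uphill, i.e. toward what we asked for.
        pixels = [p + rate * 2 * (t - p) for p, t in zip(pixels, target)]
    return pixels


target = [0.0] * 16 + [1.0] * 16  # toy 1D "picture": dark half, light half
rng0 = random.Random(0)
start = [rng0.random() for _ in target]  # the same initial noise guided_generate uses
result = guided_generate(target)
print(target_score(start, target), target_score(result, target))
```

Because this toy is linear, every starting array converges to the same result; in the real system, different initial arrays lead to different final pictures.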

The way this is set up in practice, one starts from an array of random pixels, then iteratively forms the picture one wants:

Different initial arrays lead to different final pictures, though if everything works correctly, the final pictures will all be of “what one asked for”, in this case a cat in a party hat (and, yes, there are a few “glitches”):

We don’t know how mental images are formed in human brains. But it seems conceivable that the process is not too different: that in effect, as we’re trying to “conjure up a reasonable image”, we’re continually checking whether it’s aligned with what we want, so that, for example, if our checking process is impaired we can end up with a different image, as in hemispatial neglect.

The Notion of Interconcept Space

That everything can ultimately be represented in terms of digital data is foundational to the whole computational paradigm. But the effectiveness of neural nets relies on the slightly different idea that it’s useful to treat at least many kinds of things as being characterized by arrays of real numbers. In the end one might extract the word “cat” from a neural net that’s giving captions to images. But inside, the neural net will operate with arrays of numbers that correspond in some fairly abstract way to the image you’ve given, and to the textual caption it’ll finally produce.

And in general neural nets can typically be thought of as associating “feature vectors” with things, whether those things are images, text, or anything else. But whereas words like “cat” and “dog” are discrete, the feature vectors associated with them just contain collections of real numbers. And this means that we can think of a whole space of possibilities, with “cat” and “dog” just corresponding to two specific points.
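Given two such points, the straight line through them is just linear interpolation between their feature vectors, and parameter values past 1 extrapolate beyond the second point. A minimal sketch, where `dog` and `cat` are hypothetical three-component stand-ins for real, much longer feature vectors, and where each resulting point would then be handed to an image generator (not shown):

```python
def lerp(a, b, t):
    """Point at parameter t on the straight line through a (t = 0) and b (t = 1).

    Values of t greater than 1 extrapolate past b.
    """
    return [x + t * (y - x) for x, y in zip(a, b)]


# Hypothetical 3-component stand-ins for real, much longer feature vectors.
dog = [0.2, -1.0, 0.5]
cat = [0.8, 0.0, -0.5]

# t = 0, 0.25, ..., 1 runs from "dog" to "cat"; t = 1.25, ..., 2 goes "beyond cat".
path = [lerp(dog, cat, k / 4) for k in range(9)]
for k, point in enumerate(path):
    print(k / 4, [round(x, 3) for x in point])
```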

So what’s out there in that space of possibilities? For the feature vectors we typically deal with in practice, the space is many-thousand-dimensional. But we can for example look at the (nominally straight) line from the “dog point” to the “cat point” in this space, and even generate sample images of what comes in between:

And, yes, if we want to, we can keep going “beyond cat”, and pretty soon things start becoming quite weird:

We can also do things like look at the line from an airplane to a cat, and, yes, there’s strange stuff in there (wings → hat → ears?):

What about elsewhere? For example, what happens “around” our standard “cat in a party hat”? With the particular setup we’re using, there’s a 2304-dimensional space of possibilities. But as an example, we can look at what we get on a particular 2D plane through the “standard cat” point:

Our “standard cat” is in the middle. But as we move away from the “standard cat” point, progressively weirder things happen. For a while there are recognizable (if perhaps demonic) cats to be seen. But soon there isn’t much “catness” in evidence, though sometimes hats do remain (in what we might characterize as an “all hat, no cat” situation, reminiscent of the Texan “all hat, no cattle”).
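A 2D plane through a point in a high-dimensional space can be specified by the point itself plus two orthonormal direction vectors. The sketch below assumes the directions are drawn at random and orthogonalized with one Gram–Schmidt step; `cat_point` is a placeholder vector, not the actual embedding used for these pictures, and `point_at(a, b)` gives the point at plane coordinates (a, b). Changing `seed` picks a different plane through the same point.

```python
import math
import random


def random_unit(dim, rng):
    """A uniformly random direction: a normalized vector of independent Gaussians."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def plane_through(center, seed=0):
    """Return point_at(a, b) = center + a*u + b*v for random orthonormal u, v."""
    rng = random.Random(seed)
    dim = len(center)
    u = random_unit(dim, rng)
    w = random_unit(dim, rng)
    # One Gram-Schmidt step: remove w's component along u, then renormalize.
    dot = sum(x * y for x, y in zip(u, w))
    w = [y - dot * x for x, y in zip(u, w)]
    norm = math.sqrt(sum(y * y for y in w))
    v = [y / norm for y in w]
    return lambda a, b: [c + a * x + b * y for c, x, y in zip(center, u, v)]


DIM = 2304               # dimension quoted in the text for this setup
cat_point = [0.0] * DIM  # hypothetical placeholder for the real "standard cat" vector
point_at = plane_through(cat_point)

# A 7 x 7 grid of sample points; each would be passed to the image generator.
grid = [[point_at(a, b) for a in range(-3, 4)] for b in range(-3, 4)]
```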

How about if we pick other planes through the standard cat point? All sorts of images appear:

But the fundamental story is always the same: there’s a kind of “cat island”, beyond which there are weird and only vaguely cat-related images, surrounded by an “ocean” of what seem like purely abstract patterns with no obvious cat connection. And in general the picture that emerges is that in the immense space of possible “statistically reasonable” images, there are islands dotted around that correspond to “linguistically describable concepts”, like cats in party hats.

The islands normally seem to be roughly “round”, in the sense that they extend about the same nominal distance in every direction. But relative to the whole space, each island is absolutely tiny: something like perhaps a fraction 2⁻²⁰⁰⁰ ≈ 10⁻⁶⁰⁰ of the volume of the whole space. And between these islands there lie huge expanses of what we might call “interconcept space”.
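The conversion between the two forms of that fraction can be checked directly. Since 2⁻²⁰⁰⁰ is far smaller than the smallest positive double-precision number, this short sketch works with base-10 logarithms, and cross-checks the result exactly using Python’s arbitrary-precision integers:

```python
import math

# 2^-2000 is far below the smallest positive double (about 4.9e-324),
# so work with its base-10 logarithm instead of the number itself.
log10_fraction = -2000 * math.log10(2)
print(f"2^-2000 = 10^{log10_fraction:.2f}")

# Exact cross-check with integers: 2^2000 has 603 decimal digits,
# so 10^602 <= 2^2000 < 10^603, i.e. 2^-2000 lies between 10^-603 and 10^-602.
print(len(str(2 ** 2000)))
```

The exponent comes out at about −602, consistent with the rough 10⁻⁶⁰⁰ quoted above.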

What’s out there in interconcept space? It’s full of images that are “statistically reasonable” based on the images we humans have put on the web, etc., but aren’t of things we humans have come up with words for. It’s as if in developing our civilization (and our human language) we’ve “colonized” only certain small islands in the space of all possible concepts, leaving vast amounts of interconcept space unexplored.

What’s out there is pretty weird, and sometimes a bit disturbing. Here’s what we see zooming in on the same (randomly chosen) plane around “cat island” as above:

What are all these things? In a sense, words fail us. They’re things on the shores of interconcept space, where human experience has not (yet) taken us, and for which human language has not been developed.

What if we venture further out into interconcept space, and for example just sample points in the space at random? It’s just like we already saw above: we’ll get images that are somehow “statistically typical” of what we humans have put on the web, etc., and on which our AI was trained. Here are a few more examples:

And, yes, we can pick out at least two basic classes of images: ones that seem like “pure abstract textures”, and ones that seem “representational”, and remind us of real-world scenes from human experience. There are intermediate cases, like “textures” with structures that seem like they might “represent something”, and “representational-seeming” images where we just can’t place what they might be representing.

But when we do see recognizable “real-world-inspired” images, they’re a curious reflection of the concepts (and general imagery) that we humans find “interesting enough to put on the web”. We’re not dealing here with some kind of “arbitrary interconcept space”; we’re dealing with “human-aligned” interconcept space that’s in a sense anchored to human concepts, but extends between and around them. And, yes, viewed in these terms it becomes quite unsurprising that in the interconcept space we’re sampling, there are so many images that remind us of human forms and common human situations.

But just what were the images that the AI saw, from which it formed this model of interconcept space? There were a couple of billion of them, “foraged” from the web. Like things on the web in general, it’s a motley collection; here’s a random sample:

Some can be thought of as capturing aspects of “life as it is”, but many are more aspirational, coming from staged and often promotionally oriented photographs. And, yes, there are lots of Net-a-Porter-style “clothing-without-heads” images. There are also lots of images of “things”, like food, etc. But somehow when we sample randomly in interconcept space it’s the human forms that most distinctively stand out, conceivably because “things” are not particularly consistent in their structure, while human forms always have a certain consistency of “head-body-arms, etc.” structure.

It’s notable, though, that even the most real-world-like images we find by randomly sampling interconcept space seem typically to be “painterly” and “artistic” rather than “photorealistic” and “photographic”. It’s a different story close to “concept points”, like on cat island. There, more photographic forms are common, though as we go away from the “actual concept point”, there’s a tendency towards either a rather toy-like appearance, or something more like an illustration.

By the way, even the most “photographic” images the AI generates won’t be anything that comes directly from the training set. Because, as we’ll discuss later, the AI is not set up to directly store images; instead its training process in effect “grinds up” images to extract their “statistical properties”. And while “statistical features” of the original images will show up in what the AI generates, any detailed arrangement of pixels in them is overwhelmingly unlikely to do so.

But, OK, what happens if we start not at a “describable concept” (like “a cat in a party hat”), but just at a random point in interconcept space? Here are the kinds of things we see:

The images often seem to be a bit more diverse than those around “known concept points” (like our “cat point” above). And occasionally a “flash” of something “representationally familiar” (perhaps like a human form) will show up. But most of the time we won’t be able to say “what these images are of”. They’re of things that are somehow “statistically” like what we’ve seen, but they’re not things that are familiar enough that we’ve (at least so far) developed a way to describe them, say with words.

The Images of Interconcept Space

There’s something strangely familiar, yet unfamiliar, about many of the images in interconcept space. It’s fairly common to see pictures that seem like they’re of people:

But they’re “not quite right”. And for us as humans, being particularly attuned to faces, it’s the faces that tend to seem the most wrong, though other parts are “wrong” as well.

And perhaps in commentary on our nature as a social species (or maybe it’s as a social media species), there’s a great tendency to see pairs or larger groups of people:

There’s also a strange preponderance of torso-only pictures, presumably the result of “fashion shots” in the training data (and, yes, with some rather wild “fashion statements”):

People are by far the most common identifiable elements. But one does sometimes see other things too:

Then there are some landscape-type scenes:

Some look fairly photographically literal, but others build up the impression of landscapes from more abstract elements:

Occasionally there are cityscape-like pictures:

And (still more rarely) indoor-like scenes:

Then there are pictures that look like they’re “exteriors” of some kind:

It’s common to see images built up from lines or dots or otherwise “impressionistically formed”:

And then there are lots of images that seem like they’re trying to be “of something”, but it’s not at all clear what that “thing” is, and whether indeed it’s something we humans would recognize, or whether instead it’s something somehow “fundamentally alien”:

It’s also quite common to see what look more like “pure patterns”: images that don’t really seem like they’re “trying to be things”, but come across more like “decorative textures”:

But probably the single most common type of image is a somewhat uniform texture, formed by repeating various simple elements, though usually with “dislocations” of various kinds:

Across interconcept space there’s tremendous variety to the images we see. Many have a certain artistic quality to them, and a feeling that they’re some kind of “conscious interpretation” of a perhaps mundane thing in the world, or of a simple, essentially mathematical pattern. And to some extent the “mind” involved is a collective version of our human one, reflected in a neural net that has “experienced” some of the many images humans have put on the web, etc. But in some ways the mind is also a more alien one, formed from the computational structure of the neural net, with its particular features, and no doubt in some ways computationally irreducible behavior.

And indeed there are some motifs that show up repeatedly that are presumably reflections of features of the underlying structure of the neural net. The “granulated” look, with alternation between light and dark, for example, is presumably a consequence of the dynamics of the convolutional parts of the neural net, and analogous to the results of what amounts to iterated blurring and sharpening with a certain effective pixel scale (reminiscent, for example, of video feedback):
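The blurring-and-sharpening analogy can be made concrete in one dimension. This sketch illustrates the analogy only, not the network’s actual dynamics: it repeatedly applies a 3-tap blur followed by an unsharp-mask sharpen (with an assumed strength `k`), clipping values to [0, 1]. The `gain` function gives the linear, pre-clipping amplification per step for a sinusoid of angular frequency ω. For k = 2 it exceeds 1 for all ω between 0 and π/2 and peaks at ω = π/3, so small fluctuations with a period of about 6 pixels grow fastest, until clipping limits them: a characteristic light/dark scale emerges from nearly uniform input.

```python
import math
import random


def blur(x):
    """Periodic 3-tap blur with kernel [1/4, 1/2, 1/4]."""
    n = len(x)
    return [(x[i - 1] + 2 * x[i] + x[(i + 1) % n]) / 4 for i in range(n)]


def blur_then_sharpen(x, k=2.0):
    """One feedback step: blur, then unsharp-mask sharpen, then clip to [0, 1]."""
    y = blur(x)
    detail = [a - b for a, b in zip(y, blur(y))]
    return [min(1.0, max(0.0, a + k * d)) for a, d in zip(y, detail)]


def gain(omega, k=2.0):
    """Per-step amplification (before clipping) of a sinusoid with angular frequency omega."""
    b = (1 + math.cos(omega)) / 2  # frequency response of the blur kernel
    return b * (1 + k - k * b)


rng = random.Random(1)
signal = [0.5 + 0.01 * (rng.random() - 0.5) for _ in range(60)]  # near-uniform gray
for _ in range(300):
    signal = blur_then_sharpen(signal)

# DC is kept, period-6 detail is boosted, the finest (period-2) detail is removed.
print(gain(0.0), gain(math.pi / 3), gain(math.pi))
print("".join("#" if s > 0.5 else "." for s in signal))
```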

Making Minds Alien

We can think of what we’ve done so far as exploring what a mind trained from human-like experiences can “imagine” by generalizing from those experiences. But what might a different kind of mind imagine?

As a very rough approximation, we can think of just taking the trained “mind” we’ve created, explicitly modifying it, and then seeing what it now “imagines”. Or, more specifically, we can take the neural net we’ve been using, start making changes to it, and see what effect that has on the images it produces.

We’ll discuss the details of how the network is set up later, but suffice it to say here that it involves 391 distinct internal modules, with altogether nearly a billion numerical weights. When the network is trained, those numerical weights are carefully tuned to achieve the results we want. But what if we just change them? We’ll still (normally) get a network that can generate images. But in some sense it’ll be “thinking differently”, so potentially the images will be different.

So as a very coarse first experiment (reminiscent of many that are done in biology), let’s just “knock out” each successive module in turn, setting all its weights to zero. If we ask the resulting network to generate a picture of “a cat in a party hat”, here’s what we now get:

Let’s look at these results in a bit more detail. In quite a few cases, zeroing out a single module doesn’t make much of a difference; for example, it might basically only change the facial expression of the cat:

But it can also more fundamentally change the cat (and its hat):

It can change the configuration or position of the cat (and, yes, some of those paws are not anatomically correct):

Zeroing out other modules can in effect change the “rendering” of the cat:

But in other cases things can get much more mixed up, and difficult for us to parse:

Sometimes there’s clearly a cat there, but its presentation is odd at best:

And sometimes we get images that have definite structure, but don’t seem to have anything to do with cats:

Then there are cases where we basically just get “noise”, albeit with things superimposed:

But, much as in neurophysiology, there are some modules (like the very first and last ones in our original list) where zeroing them out basically makes the system not work at all, and just generate “pure random noise”.
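Mechanically, each of these single-module knockouts is just a copy of the weights with one module set to zero. This sketch assumes a hypothetical toy representation in which a “network” is a dictionary mapping module names to flat lists of weights; a real network stores tensors, but the operation is the same in spirit. Each variant would then be asked to generate the “cat in a party hat” image (not shown).

```python
def knock_out(network, module):
    """Return a copy of `network` with every weight in `module` set to zero."""
    mutated = {name: list(weights) for name, weights in network.items()}
    mutated[module] = [0.0] * len(mutated[module])
    return mutated


# Hypothetical toy "network": module name -> flat list of weights.
network = {
    "module_0": [0.3, -1.2, 0.7],
    "module_1": [2.0, 0.1],
    "module_2": [-0.4, 0.9, 0.05],
}

# One variant per module, each with exactly that module zeroed out.
variants = {name: knock_out(network, name) for name in network}
for name, variant in variants.items():
    print(name, variant)
```

Copying rather than mutating in place keeps the trained network intact, so every knockout starts from the same original weights.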

As we’ll discuss below, the whole neural net that we’re using has a rather complex internal structure (for example, with a few fundamentally different kinds of modules). But here’s a sample of what happens if one zeros out modules at different places in the network, and what we see is that for the most part there’s no obvious correlation between where the module is, and what effect zeroing it out will have:

So far, we’ve just looked at what happens if we zero out a single module at a time. Here are some randomly chosen examples of what happens if one zeros out successively more modules (one might call this a “HAL experiment”, in remembrance of the fate of the fictional HAL AI in the movie 2001):

And basically once the “catness” of the images is lost, things become more and more alien from there on out, ending either in apparent randomness, or sometimes in barren “zeroness”.
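The cumulative version can be sketched the same way, again assuming a hypothetical toy network stored as a dictionary of weight lists. Here the order in which modules are knocked out is a random shuffle (an assumption; the examples are only described as randomly chosen), and each stage zeros one more module than the last, ending with every weight at zero.

```python
import random


def cumulative_knockouts(network, seed=0):
    """Return a list of stages: stage k has k modules (in a shuffled order) zeroed."""
    order = list(network)
    random.Random(seed).shuffle(order)
    current = {name: list(ws) for name, ws in network.items()}
    stages = [{name: list(ws) for name, ws in current.items()}]  # stage 0: intact
    for name in order:
        current[name] = [0.0] * len(current[name])
        stages.append({n: list(ws) for n, ws in current.items()})
    return stages


# Hypothetical toy "network": module name -> flat list of weights.
network = {
    "module_0": [0.3, -1.2, 0.7],
    "module_1": [2.0, 0.1],
    "module_2": [-0.4, 0.9, 0.05],
    "module_3": [1.5, -0.2],
}
stages = cumulative_knockouts(network)
for k, stage in enumerate(stages):
    zeroed = sum(all(w == 0.0 for w in ws) for ws in stage.values())
    print(k, zeroed)
```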

Rather than zeroing out modules, we can instead randomize the weights in them (perhaps a bit like the effect of a tumor rather than a stroke in a brain), but the results are usually at least qualitatively similar:
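Randomizing instead of zeroing changes only the replacement values. In this sketch the new weights are drawn from a zero-mean Gaussian whose standard deviation matches that of the original module’s weights; that choice of distribution is an assumption, not something specified here, and the toy dictionary representation is hypothetical.

```python
import random
import statistics


def randomize_module(network, module, seed=0):
    """Return a copy of `network` with `module` replaced by scale-matched Gaussian noise."""
    rng = random.Random(seed)
    weights = network[module]
    sigma = statistics.pstdev(weights)  # match the spread of the trained weights
    mutated = {name: list(ws) for name, ws in network.items()}
    mutated[module] = [rng.gauss(0.0, sigma) for _ in weights]
    return mutated


# Hypothetical toy "network": module name -> flat list of weights.
network = {
    "module_0": [0.3, -1.2, 0.7],
    "module_1": [2.0, 0.1],
    "module_2": [-0.4, 0.9, 0.05],
}
mutated = randomize_module(network, "module_1")
print(mutated["module_1"])
```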

Something else we can do is just to progressively mix randomness uniformly into every weight in the network (perhaps a bit like globally “drugging” a brain). Here are three examples where in each case 0%, 1%, 2%, … of randomness was added, all “fading away” in a very similar way:
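One simple way to realize “mixing in” a given percentage of randomness, assumed here rather than taken from the text, is a linear blend between each trained weight and a fresh random value, so that 0% leaves the network unchanged and 100% replaces it entirely with noise. Reusing the same seed for every percentage means the sequence moves along a single straight path from the trained weights toward one fixed random network.

```python
import random


def add_randomness(weights, fraction, seed=0):
    """Blend each weight with Gaussian noise: (1 - fraction) * w + fraction * r."""
    rng = random.Random(seed)  # same seed -> same noise vector r for every fraction
    return [(1 - fraction) * w + fraction * rng.gauss(0.0, 1.0) for w in weights]


weights = [0.3, -1.2, 0.7, 2.0, 0.1]  # hypothetical trained weights
schedule = [add_randomness(weights, k / 100) for k in range(101)]  # 0%, 1%, ..., 100%

# Squared distance from the trained weights grows steadily along the schedule.
dists = [sum((a - b) ** 2 for a, b in zip(s, weights)) for s in schedule]
print(dists[0], dists[50], dists[100])
```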

And similarly, we can progressively scale down towards zero (in 1% increments: 100%, 99%, 98%, …) all the weights in the network:

Or we can progressively increase the numerical values of the weights, eventually in some sense “blowing the mind” of the network (and going a bit “psychedelic” in the process):
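Scaling is the simplest modification of all: multiply every weight by the same factor. The shrinking schedule below uses 1% steps from 100% down to 0%; no step size is given for the growing schedule, so the 10% increments used here are an assumption, as is the toy dictionary representation.

```python
def scale_weights(network, factor):
    """Return a copy of `network` with every weight multiplied by `factor`."""
    return {name: [factor * w for w in ws] for name, ws in network.items()}


# Hypothetical toy "network": module name -> flat list of weights.
network = {
    "module_0": [0.3, -1.2, 0.7],
    "module_1": [2.0, 0.1],
}

shrinking = [scale_weights(network, (100 - k) / 100) for k in range(101)]  # 100%, 99%, ..., 0%
growing = [scale_weights(network, 1 + k / 10) for k in range(11)]  # 100%, 110%, ..., 200% (assumed steps)
print(shrinking[50]["module_0"], growing[10]["module_0"])
```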

Minds in Rulial Space

We can think of what we’ve done so far as exploring some of the “natural history” of what’s out there in generative AI space, or as providing a small taste of at least one approximation to the kind of mental imagery one might encounter in alien minds. But how does this fit into a more general picture of alien minds and what they might be like?

With the concept of the ruliad we finally have a principled way to talk about alien minds, at least at a theoretical level. And the key point is that any alien mind (or, for that matter, any mind) can be thought of as “observing” or sampling the ruliad from its own particular point of view, or in effect, its own position in rulial space.

The ruliad is defined to be the entangled limit of all possible computations: a unique object with an inevitable structure. And the idea is that anything, whether one interprets it as a phenomenon or an observer, must be part of the ruliad. The key to our Physics Project is then that “observers like us” have certain general characteristics. We’re computationally bounded, with “finite minds” and limited sensory input. And we have a certain coherence that comes from our belief in our persistence in time, and our consistent thread of experience. And what we then discover in our Physics Project is the rather remarkable result that from these characteristics and the general properties of the ruliad alone it’s essentially inevitable that we must perceive the universe to exhibit the fundamental physical laws it does, in particular the three big theories of twentieth-century physics: general relativity, quantum mechanics and statistical mechanics.

But what about more detailed aspects of what we perceive? Well, that will depend on more detailed aspects of us as observers, and of how our minds are set up. And in a sense, each different possible mind can be thought of as existing at a certain place in rulial space. Different human minds are mostly close in rulial space, animal minds further away, and more alien minds still further. But how can we characterize what these minds are “thinking about”, or how these minds “perceive things”?

From inside our own minds we can form a sense of what we perceive. But we don’t really have good ways to reliably probe what another mind perceives. But what about what another mind imagines? Well, that’s where what we’ve been doing here comes in. Because with generative AI we’ve got a mechanism for exposing the “mental imagery” of an “AI mind”.

We could consider doing this with words and text, say with an LLM. But for us humans, images have a certain fluidity that text does not. Our eyes and brains can perfectly well “see” and absorb images even if we don’t “understand” them. But it’s very difficult for us to absorb text that we don’t “understand”; it usually tends to seem just like a kind of “word soup”.

But, OK, so we generate “mental imagery” from “minds” that have been “made alien” by various modifications. How come we humans can understand anything such minds make? Well, it’s a bit like one person being able to understand the thoughts of another. Their brains (and minds) are built differently. And their “internal view” of things will inevitably be different. But the crucial idea, one that’s for example central to language, is that it’s possible to “package up” thoughts into something that can be “transported” to another mind. Whatever some particular internal thought might be, by the time we can express it with words in a language, it’s possible to communicate it to another mind that will “unpack” it into different internal thoughts.

It’s a nontrivial fact of physics that “pure motion” in physical space is possible; in other words, that an “object” can be moved “without change” from one place in physical space to another. And now, in a sense, we’re asking about pure motion in rulial space: can we move something “without change” from one mind at one place in rulial space to another mind at another place? In physical space, things like particles (as well as things like black holes) are the fundamental elements that are imagined to move without change. So what’s now the analog in rulial space? It seems to be concepts, as often represented, for example, by words.

So what does that mean for our exploration of generative AI “alien minds”? We can ask whether, when we move from one potentially alien mind to another, concepts are preserved. We don’t have a perfect proxy for this (though we could make a better one by appropriately training neural net classifiers). But as a first approximation this is like asking whether, as we “change the mind” (or move in rulial space), we can still recognize the “concept” the mind produces. Or, in other words, if we start with a “mind” that’s generating a cat in a party hat, will we still recognize the concepts of cat or hat in what a “modified mind” produces?

And what we’ve seen is that sometimes we do, and sometimes we don’t. For example, when we looked at “cat island” we saw a certain boundary beyond which we could no longer recognize “catness” in the image that was produced. And by studying things like cat island (and particularly its analogs when not just the “prompt” but also the underlying neural net is changed) it should be possible to map out how far concepts “extend” across alien minds.

It’s also possible to think about a kind of inverse question: just what is the extent of a mind in rulial space? Or, in other words, what range of points of view, ultimately about the ruliad, can it hold? Will it be “narrow-minded”, able to think only in particular ways, with particular concepts? Or will it be more “broad-minded”, encompassing more ways of thinking, with more concepts?

In a sense the whole arc of the intellectual development of our civilization can be thought of as corresponding to an expansion in rulial space: with us progressively being able to think in new ways, and about new things. And as we expand in rulial space, we’re in effect encompassing more of what we previously would have had to consider the domain of an alien mind.

When we look at images produced by generative AI away from the specifics of human experience (say in interconcept space, or with modified rules of generation) we may at first be able to make little of them. As with inkblots or arrangements of stars, we’ll often find ourselves wanting to say that what we see looks like this or that thing we know.

But the real question is whether we can devise some way of describing what we see that allows us to build thoughts on what we see, or “reason” about it. And what’s very typical is that we manage to do this when we come up with a general “symbolic description” of what we see, say captured with words in natural language (or, now, computational language). Before we have those words, or that symbolic description, we’ll tend simply not to absorb what we see.

And so, for example, even though nested patterns have always existed in nature, and were even explicitly created by mosaic artisans in the early 1200s, they seem never to have been systematically noticed or discussed at all until the latter part of the 20th century, when finally the framework of “fractals” was developed for talking about them.

And so it may be with many of the forms we’ve seen here. As of today, we have no names for them, no systematic framework for thinking about them, and no reason to view them as significant. But particularly if the things we do repeatedly show us such forms, we’ll eventually come up with names for them, and start incorporating them into the domain that our minds cover.

And in a sense what we’ve done here can be thought of as showing us a preview of what’s out there in rulial space, in what’s currently the domain of alien minds. In the general exploration of ruliology, and the investigation of what arbitrary simple programs in the computational universe do, we’re able to jump far across the ruliad. But it’s typical that what we see is not something we can connect to things we’re familiar with. In what we’re doing here, we’re moving only much smaller distances in rulial space. We’re starting from generative AI that’s closely aligned with current human development, having been trained from images that we humans have put on the web, etc. But then we’re making small changes to our “AI mind”, and looking at what it now generates.

What we see is often surprising. But it’s still close enough to where we “currently are” in rulial space that we can, at least to some extent, absorb and reason about what we’re seeing. Still, the images often don’t “make sense” to us. And, yes, quite possibly the AI has invented something that has a rich and “meaningful” inner structure. It’s just that we don’t (yet) have a way to talk about it, and if we did, it would immediately “make perfect sense” to us.

So if we see something we don’t understand, can we just “train a translator”? At some level the answer must be yes, because the Principle of Computational Equivalence implies that ultimately there’s a fundamental uniformity to the ruliad. But the problem is that the translator is likely to have to do an irreducible amount of computational work, and so it won’t be implementable by a “mind like ours”. Still, even though we can’t create a “general translator”, we can expect that certain features of what we see will still be translatable, in effect by exploiting certain pockets of computational reducibility that must necessarily exist even when the system as a whole is full of computational irreducibility. And operationally what this means in our case is that the AI may in effect have found certain regularities or patterns that we don’t happen to have noticed but that are useful in exploring further from the “current human point” in rulial space.

It’s very challenging to get an intuitive understanding of what rulial space is like. But the approach we’ve taken here is for me a promising first effort in “humanizing” rulial space, and seeing just how we might be able to relate to what is so far the domain of alien minds.

Appendix: How Does the Generative AI Work?

In the main part of this piece, we’ve mostly just talked about what generative AI does, not how it works inside. Here I’ll go a little deeper into what’s inside the particular type of generative AI system that I’ve used in my explorations. It’s a method called stable diffusion, and its operation is in many ways both clever and surprising. As it’s implemented today it’s steeped in fairly complicated engineering details. To what extent these will ultimately be necessary isn’t clear. But in any case here I’ll mostly concentrate on general principles, and on giving a broad outline of how generative AI can be used to produce images.

The Distribution of Typical Images

At the core of generative AI is the ability to produce things of some particular type that “follow the patterns of” known things of that type. So, for example, large language models (LLMs) are intended to produce text that “follows the patterns” of text written by humans, say on the web. And generative AI systems for images are similarly intended to produce images that “follow the patterns” of images put on the web, etc.

But what kinds of patterns exist in typical images, say on the web? Here are some examples of “typical images”, scaled down to 32×32 pixels and taken from a standard set of 60,000 images:

And as a very first thing, we can ask what colors show up in these images. They’re not uniformly distributed in RGB space:

But what about the positions of different colors? Adjusting to accentuate color differences, the “average image” turns out to have a curious “HAL’s eye” look (presumably with blue for sky at the top, and brown for earth at the bottom):

But just picking pixels independently, even with the color distribution inferred from actual images, won’t produce images that in any way look “natural” or “realistic”:

And the immediate issue is that the pixels aren’t really independent: most pixels in most images are correlated in color with nearby pixels. In a first approximation one can capture this, for example, by fitting the list of colors of all the pixels to a multivariate Gaussian distribution with a covariance matrix that represents their correlations. Sampling from this distribution gives images like these, which indeed look somehow “statistically natural”, even if there isn’t appropriate detailed structure in them:
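
As a rough sketch of the Gaussian idea, the Python below fits a mean vector and covariance matrix to a set of training vectors and then samples new ones through a Cholesky factor. It is an illustration under stated assumptions, not the setup used for the pictures here: the “images” are hypothetical 8-pixel grayscale strips of smoothly varying brightness produced by `make_training_strips`, and every function name is invented for this example.

```python
import math
import random

def make_training_strips(count, width, rng):
    """Hypothetical stand-in for real images: 1D grayscale strips whose brightness
    varies smoothly (a random base level plus a gentle slope plus a little noise)."""
    strips = []
    for _ in range(count):
        base, slope = rng.uniform(0.2, 0.8), rng.uniform(-0.05, 0.05)
        strips.append([base + slope * j + rng.gauss(0.0, 0.01) for j in range(width)])
    return strips

def mean_and_cov(rows):
    """Mean vector and covariance matrix (dividing by n) of equal-length vectors."""
    n, d = len(rows), len(rows[0])
    mu = [sum(r[j] for r in rows) / n for j in range(d)]
    cov = [[sum((r[i] - mu[i]) * (r[j] - mu[j]) for r in rows) / n for j in range(d)]
           for i in range(d)]
    return mu, cov

def cholesky(a, jitter=1e-9):
    """Lower-triangular L such that L times its transpose equals a + jitter * I."""
    d = len(a)
    L = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][i] = math.sqrt(a[i][i] + jitter - s)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    return L

def sample_gaussian(mu, L, rng):
    """Draw mu + L z with z standard normal, so the sample has covariance L L^T."""
    z = [rng.gauss(0.0, 1.0) for _ in mu]
    return [mu[i] + sum(L[i][k] * z[k] for k in range(i + 1)) for i in range(len(mu))]

def correlation(xs, ys):
    """Pearson correlation of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

if __name__ == "__main__":
    rng = random.Random(0)
    mu, cov = mean_and_cov(make_training_strips(500, 8, rng))
    L = cholesky(cov)
    samples = [sample_gaussian(mu, L, rng) for _ in range(2000)]
    print("adjacent-pixel correlation:",
          correlation([s[0] for s in samples], [s[1] for s in samples]))
```

Neighboring pixels in the samples come out strongly correlated, just as in the training strips; drawing each pixel independently from its own mean and variance would throw that correlation away.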

So, OK, how can one do better? The basic idea is to use neural nets, which can in effect encode detailed long-range connections between pixels. In the end it’s similar to what’s done in LLMs like ChatGPT, where one has to deal with long-range connections between words in text. But for images it’s structurally a bit more difficult, because in some sense one has to “consistently fit together 2D patches” rather than just progressively extend a 1D sequence.

And the typical way this is done at first seems a bit bizarre. The basic idea is to start with a random array of pixels (corresponding in effect to “pure noise”) and then progressively to “reduce the noise” to end up with a “reasonable image” that follows the patterns of typical images, all the while guided by some prompt that says what one wants the “reasonable image” to be of.

Attractors and Inverse Diffusion

How does one go from randomness to definite “reasonable” things? The key is to use the notion of attractors. In a very simple case, one might have a system, like this “mechanical” example, where from any “randomly chosen” initial condition one always evolves to one of (here) two definite (fixed-point) attractors:
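
A minimal stand-in for such a two-attractor system can be sketched in Python as an overdamped particle in the double-well potential V(x) = (x² − 1)²/4, advanced with simple Euler steps. The potential, step size and step count are hypothetical choices; the article does not specify its mechanical example.

```python
import random

def settle(x, dt=0.05, steps=2000):
    """Overdamped motion in the double-well potential V(x) = (x**2 - 1)**2 / 4.
    Each Euler step moves x downhill along dx/dt = -V'(x) = x - x**3,
    so x = -1 and x = +1 are the two stable fixed points (attractors)."""
    for _ in range(steps):
        x += dt * (x - x ** 3)
    return x

if __name__ == "__main__":
    rng = random.Random(1)
    for _ in range(10):
        x0 = rng.uniform(-2.0, 2.0)        # "randomly chosen" initial condition
        print(f"{x0:+.3f} -> {settle(x0):+.6f}")
```

Every start with x > 0 ends at +1 and every start with x < 0 ends at −1, so the random initial condition only decides which of the two fixed points is reached.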

One has something similar in a neural net that’s, for example, trained to recognize digits:

Regardless of exactly how each digit is written, or what noise gets added to it, the network will take this input and evolve to an attractor corresponding to a digit.

Sometimes there can be lots of attractors. As in this (“class 2”) cellular automaton evolving down the page, many different initial conditions can lead to the same attractor, but there are many possible attractors, corresponding to different final patterns of stripes:

The same can be true, for example, in 2D cellular automata, where now the attractors can be thought of as being different “images” with structure determined by the cellular automaton rule:

But what if one wants to arrange to have particular images as attractors? Here’s where the somewhat surprising idea of “stable diffusion” can be used. Imagine we start with two possible images, and then in a sequence of steps progressively add noise to them:
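
One common way to write these noising steps, assumed here rather than taken from the article, is the variance-preserving schedule used in denoising diffusion models: with per-step noise variances β_t and ᾱ_t the running product of (1 − β_t), the image after step t is √ᾱ_t · x₀ + √(1 − ᾱ_t) · ε, where ε is fresh Gaussian noise. The linear β range of 1e-4 to 0.02 over 1000 steps is a conventional default, and the function names and the 16-pixel test image are hypothetical.

```python
import math
import random

def linear_betas(steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances rising linearly (a conventional, assumed schedule)."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]

def alpha_bars(betas):
    """Running product of (1 - beta): the fraction of signal variance left after each step."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def noised(x0, t, abar, rng):
    """Jump directly to step t: sqrt(abar[t]) * x0 + sqrt(1 - abar[t]) * Gaussian noise."""
    keep, spread = math.sqrt(abar[t]), math.sqrt(1.0 - abar[t])
    return [keep * v + spread * rng.gauss(0.0, 1.0) for v in x0]

if __name__ == "__main__":
    rng = random.Random(0)
    image = [1.0] * 8 + [-1.0] * 8          # hypothetical 16-pixel image
    abar = alpha_bars(linear_betas())
    for t in (0, 100, 300, 999):
        print(t, [round(v, 2) for v in noised(image, t, abar, rng)])
```

By the last step ᾱ is below 10⁻³, so almost nothing of the original image survives: that is the “pure noise” end of the sequence.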

Here’s the bizarre thing we now want to do: train a neural net to take the image we get at a particular step, and “go backwards”, removing noise from it. The neural net we’ll use for this is somewhat complicated, with “convolutional” pieces that basically operate on blocks of nearby pixels, and “transformers” that get applied with certain weights to more distant pixels. Schematically, in Wolfram Language the network looks at a high level like this:

And roughly what it’s doing is to make an informationally compressed version of each image, and then to expand it again (through what is usually called a “U-net” neural net). We start with an untrained version of this network (say just randomly initialized). Then we feed it a few million examples of noisy pictures of the two images, and the denoised outputs we want in each case.

Then if we take the trained neural net and successively apply it, for example, to a “noised” version of one of the two images, the net will “correctly” determine that the “denoised” version is a “pure” copy of that image:

But what if we apply this network to pure noise? The network has been set up always eventually to evolve to one of two attractors, one for each of the two images. But which it “chooses” in a particular case will depend on the details of the initial noise, so in effect the network will seem to be picking at random to “fish” one image or the other out of the noise:
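
The “fishing” behavior can be reproduced without any training in a toy setting. For a data set consisting of exactly two images, the best possible denoiser has a closed form (an average of the two images, weighted by how plausible each one is given the noisy input), so the sketch below uses that formula as a hypothetical stand-in for the trained U-net. The 16-pixel targets, the noise levels, and the deterministic Euler-type update x ← d + (σ_next/σ)(x − d), of the kind used in DDIM-style samplers, are all assumptions made for illustration.

```python
import math
import random

# Two hypothetical 16-pixel "images" (values in [-1, 1]) that play the role of the targets.
TARGET_A = [1.0] * 8 + [-1.0] * 8
TARGET_B = [-1.0] * 8 + [1.0] * 8

def ideal_denoise(x, sigma, targets=(TARGET_A, TARGET_B)):
    """Posterior-mean guess of the clean image, given x = clean + sigma * noise and
    a clean image that is equally likely to be any of `targets`. This analytic formula
    stands in for the trained denoising network."""
    logw = [-sum((xi - ti) ** 2 for xi, ti in zip(x, t)) / (2.0 * sigma ** 2) for t in targets]
    top = max(logw)                      # subtract the max so exp() cannot overflow
    w = [math.exp(lw - top) for lw in logw]
    total = sum(w)
    return [sum(wk * t[i] for wk, t in zip(w, targets)) / total for i in range(len(x))]

def generate(rng, steps=50, sigma_max=20.0, sigma_min=0.002):
    """Start from pure noise and denoise step by step with geometrically shrinking sigma."""
    ratio = (sigma_min / sigma_max) ** (1.0 / (steps - 1))
    sigmas = [sigma_max * ratio ** k for k in range(steps)]
    x = [sigma_max * rng.gauss(0.0, 1.0) for _ in TARGET_A]
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        d = ideal_denoise(x, sigma)
        # Deterministic step: keep the current guess d and shrink the
        # remaining "noise" x - d in proportion to the drop in noise level.
        x = [di + (sigma_next / sigma) * (xi - di) for xi, di in zip(x, d)]
    return ideal_denoise(x, sigmas[-1])

if __name__ == "__main__":
    for seed in range(6):
        out = generate(random.Random(seed))
        print(seed, "A" if out == TARGET_A else "B" if out == TARGET_B else "other")
```

Which image comes out depends only on the initial noise (in this symmetric case, on the sign of its overlap with the first target), so different seeds can land on either attractor.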

How does this apply to our original goal of generating images “like” those found, for example, on the web? Well, instead of just training our “denoising” (or “inverse diffusion”) network on a couple of “target” images, let’s imagine we train it on billions of images from the web. And let’s also assume that our network isn’t big enough to store all those images in any kind of explicit way.

In the abstract it’s not clear what the network will do. But the remarkable empirical fact is that it seems to manage to successfully generate (“from noise”) images that “follow the general patterns” of the images it was trained from. There isn’t any clear way to “formally validate” this success. It’s really just a matter of human perception: to us the images (usually) “look right”.

It could be that with a different (alien?) system of perception we’d immediately see “something wrong” with the images. But for purposes of human perception, the neural net seems to produce “reasonable-looking” images, perhaps not least because the neural net operates at least roughly the way our brains and our processes of perception seem to operate.

Injecting a Prompt

We’ve described how a denoising neural net seems to be able to start from some configuration of random noise and generate a “reasonable-looking” image. And from any particular configuration of noise, a given neural net will always generate the same image. But there’s no way to tell in advance what that image will be of; it’s just something to explore empirically, as we did above.

But what if we want to “guide” the neural net to generate an image that we’d describe as being of a specific thing, like “a cat in a party hat”? We could imagine “continually checking” whether the image we’re generating would be recognized by a neural net as being of what we wanted. And conceptually that’s what we can do. But we also need a way to “redirect” the image generation if it’s “not going in the right direction”. A convenient way to do this is to mix a “description of what we want” right into the denoising training process. In particular, if we’re training to “recover” one of the images, we mix a description of that image right alongside the picture of it.

And here we can make use of a key feature of neural nets: that ultimately they operate on arrays of (real) numbers. So whether they’re dealing with images composed of pixels, or text composed of words, all these things eventually have to be “ground up” into arrays of real numbers. And when a neural net is trained, what it’s ultimately “learning” is just how to appropriately transform these “disembodied” arrays of numbers.

There’s a fairly natural way to generate an array of numbers from an image: just take the triples of red, green and blue intensity values for each pixel. (Yes, we could pick a different detailed representation, but it’s not likely to matter, because the neural net can always effectively “learn a conversion”.) But what about a textual description, like “a cat in a party hat”?
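
For concreteness, here is that pixel-to-numbers conversion as a short Python function. Representing the image as rows of (red, green, blue) tuples and scaling the 0 to 255 channel values into [0, 1] are common conventions assumed here, not something the text prescribes; the function name is hypothetical.

```python
def image_to_numbers(pixels):
    """Flatten an image given as rows of (red, green, blue) triples with 0-255 values
    into one flat list of reals in [0, 1], row by row and pixel by pixel."""
    return [channel / 255.0 for row in pixels for pixel in row for channel in pixel]

if __name__ == "__main__":
    tiny = [[(255, 0, 0), (0, 255, 0)],
            [(0, 0, 255), (255, 255, 255)]]   # hypothetical 2x2 image
    print(image_to_numbers(tiny))
```

A 2×2 image becomes 12 numbers (3 per pixel); a 32×32 image like those above would become 3,072.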

We need to find a way to encode text as an array of numbers. And actually LLMs face the same issue, and we can solve it here in basically the same way LLMs do. In the end what we want is to derive from any piece of text a “feature vector” consisting of an array of numbers that provide some kind of representation of the “effective meaning” of the text, or at least the “effective meaning” relevant to describing images.

Let’s say we train a neural net to reproduce associations between images and captions, as found, for example, on the web. If we feed this neural net an image, it’ll try to generate a caption for the image. If we feed the neural net a caption, it’s not realistic for it to generate a whole image. But we can look at the innards of the neural net and see the array of numbers it derived from the caption, and then use this as our feature vector. And the idea is that because captions that “mean the same thing” should be associated in the training set with “the same kind of images”, they should have similar feature vectors.
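
“Similar feature vectors” is commonly measured with cosine similarity. The sketch below shows only that measurement. The encoder is a deliberately crude, hypothetical stand-in (word counts over a fixed vocabulary) rather than a trained image-caption network, so it captures word overlap, not meaning.

```python
import math
from collections import Counter

def toy_feature_vector(text, vocab):
    """Hypothetical stand-in for a learned text encoder: word counts over a fixed vocabulary."""
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in vocab]

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1 means pointing the same way, 0 orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

if __name__ == "__main__":
    captions = ["a cat in a party hat", "a cat wearing a party hat", "a dog on a beach"]
    vocab = sorted(set(" ".join(captions).split()))
    vecs = [toy_feature_vector(c, vocab) for c in captions]
    print(cosine_similarity(vecs[0], vecs[1]), cosine_similarity(vecs[0], vecs[2]))
```

Here the two cat captions score about 0.875, while the cat and dog captions score about 0.53. A trained encoder would aim for the same ordering even when the captions share no words at all.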

So now let’s say we want to generate a picture of a cat in a party hat. First we find the feature vector associated with the text “a cat in a party hat”. Then this is what we keep mixing in at each stage of denoising to guide the denoising process, and we end up with an image that the image captioning network will identify as “a cat in a party hat”.
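
The text does not say exactly how the feature vector is mixed in. One widely used technique in Stable Diffusion pipelines is classifier-free guidance: at each step the network makes one denoising guess with the prompt and one without, and the step uses unconditional + scale × (conditional − unconditional). The toy below assumes that technique and reuses a two-image setup with an analytic denoiser in place of a trained network; the prompt names “first” and “second”, the 16-pixel targets, and the guidance scale of 3.0 are all hypothetical.

```python
import math
import random

# Hypothetical "prompts", each naming one of two 16-pixel target images.
TARGETS = {"first": [1.0] * 8 + [-1.0] * 8, "second": [-1.0] * 8 + [1.0] * 8}

def denoise(x, sigma, names):
    """Posterior-mean guess of the clean image among the targets listed in `names`
    (an analytic stand-in for a trained, prompt-aware denoising network)."""
    ts = [TARGETS[n] for n in names]
    logw = [-sum((a - b) ** 2 for a, b in zip(x, t)) / (2.0 * sigma ** 2) for t in ts]
    top = max(logw)
    w = [math.exp(lw - top) for lw in logw]
    total = sum(w)
    return [sum(wk * t[i] for wk, t in zip(w, ts)) / total for i in range(len(x))]

def guided_denoise(x, sigma, prompt, scale):
    """Classifier-free-guidance-style mix: unconditional + scale * (conditional - unconditional)."""
    uncond = denoise(x, sigma, list(TARGETS))
    cond = denoise(x, sigma, [prompt])
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]

def generate(prompt, rng, scale=3.0, steps=50, sigma_max=20.0, sigma_min=0.002):
    """Denoise from pure noise, mixing the prompt in at every step."""
    ratio = (sigma_min / sigma_max) ** (1.0 / (steps - 1))
    sigmas = [sigma_max * ratio ** k for k in range(steps)]
    x = [sigma_max * rng.gauss(0.0, 1.0) for _ in range(16)]
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        d = guided_denoise(x, sigma, prompt, scale)
        x = [di + (sigma_next / sigma) * (xi - di) for xi, di in zip(x, d)]
    return guided_denoise(x, sigmas[-1], prompt, scale)

if __name__ == "__main__":
    for seed in range(4):
        out = generate("second", random.Random(seed))
        print(seed, out == TARGETS["second"])
```

With the prompt mixed in, every seed is steered to the requested image; with a scale of 0 the prompt is ignored and the initial noise alone decides.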

The Latent Space “Trick”

The most direct way to do “denoising” is to operate directly on the pixels in an image. But it turns out there’s a considerably more efficient approach, which operates not on pixels but on “features” of the image, or, more specifically, on a feature vector that describes an image.

In a “raw image” presented in terms of pixels, there’s lots of redundancy, which is why, for example, image formats like JPEG and PNG manage to compress raw images so much without even noticeably modifying them for purposes of typical human perception. But with neural nets it’s possible to do much greater compression, particularly if all we want to do is to preserve the “meaning” of an image, without worrying about its precise details.

And in fact, as part of training a neural net to associate images with captions, we can derive a “latent representation” of images, in effect a feature vector that captures the “important features” of the image. Then we can do everything we’ve discussed so far directly on this latent representation, decoding it only at the end into the actual pixel representation of the image.

So what does it look like to build up the latent representation of an image? With the particular setup we’re using here, it turns out that the feature vector in the latent representation still preserves the basic spatial arrangement of the image. The “latent pixels” are much coarser than the “visible” ones, and happen to be characterized by 4 numbers rather than the 3 for RGB. But we can decode things to see the “denoising” process happening in terms of “latent pixels”:
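
To put numbers on “much coarser”, assume the figures used by Stable Diffusion v1 (they are not stated in the text): a 512×512 RGB image is encoded into a 64×64 grid of latent pixels, an 8× reduction along each side, with 4 numbers per latent pixel. The bookkeeping, with hypothetical function names, is:

```python
def latent_shape(height, width, factor=8, channels=4):
    """Grid of latent pixels for an image, assuming 8x downsampling per side and
    4 numbers per latent pixel (the Stable Diffusion v1 figures, not stated in the text)."""
    if height % factor or width % factor:
        raise ValueError("image sides must be multiples of the downsampling factor")
    return height // factor, width // factor, channels

def compression_ratio(height, width, factor=8, channels=4):
    """How many RGB numbers there are per latent number."""
    h, w, c = latent_shape(height, width, factor, channels)
    return (height * width * 3) / (h * w * c)

if __name__ == "__main__":
    print(latent_shape(512, 512), compression_ratio(512, 512))
```

That is 786,432 numbers reduced to 16,384, a factor of 48, which is why running the many denoising steps on the latent representation is so much cheaper than running them on pixels.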

And then we can take the latent representation we get, and once again use a trained neural net to fill in a “decoding” of it in terms of actual pixels, getting out our final generated image.

An Analogy in Simple Programs

Generative AI systems work by having attractors that are carefully constructed through training so that they correspond to “reasonable outputs”. A large part of what we’ve done above is to study what happens to these attractors when we change the internal parameters of the system (neural net weights, etc.). What we’ve seen has been complicated, and, indeed, often quite “alien looking”. But is there perhaps a simpler setup in which we can see similar core phenomena?

By the time we’re thinking about creating attractors for realistic images, etc., it’s inevitable that things are going to be complicated. But what if we look at systems with much simpler setups? For example, consider a dynamical system whose state is characterized just by a single number, such as an iterated map on the interval, like x ↦ a x (1 − x).

Starting from a uniform array of possible x values, we can show down the page which values of x are reached at successive iterations:

For a = 2.9, the system evolves from any initial value to a single attractor, which consists of a single fixed final value. But if we change the “internal parameter” a to 3.1, we now get two distinct final values. And at the “bifurcation point” a = 3 there’s a sudden change from one to two distinct final values. Indeed, in our generative AI system it’s fairly common to see similar discontinuous changes in behavior even when an internal parameter is changed continuously.
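
The bifurcation is easy to check numerically. The sketch iterates the map from a grid of starting values strictly inside (0, 1), throws away a long transient, and counts the distinct values that remain. The grid size, transient length and the rounding to 4 decimal places are arbitrary choices made for this example.

```python
def final_values(a, n_start=101, transient=2000, keep=64, digits=4):
    """Iterate x -> a*x*(1 - x) from evenly spaced starts strictly inside (0, 1),
    skip a long transient, then collect the distinct (rounded) values still visited."""
    finals = set()
    for k in range(1, n_start + 1):
        x = k / (n_start + 1)            # k/(n+1) never hits the endpoints 0 or 1
        for _ in range(transient):
            x = a * x * (1.0 - x)
        for _ in range(keep):
            x = a * x * (1.0 - x)
            finals.add(round(x, digits))
    return sorted(finals)

if __name__ == "__main__":
    for a in (2.9, 3.1):
        print(a, final_values(a))
```

For a = 2.9 this gives a single value, the fixed point 1 − 1/a ≈ 0.6552, and for a = 3.1 two values, about 0.5580 and 0.7646, which the system alternates between. Right at a = 3 convergence is extremely slow, so a count taken there after a finite transient is not meaningful.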

As another example, slightly closer to image generation, consider (as above) a 1D cellular automaton that shows class 2 behavior, and evolves from any initial state to some fixed final state that one can think of as an attractor for the system:

Which attractor one reaches depends on the initial condition one starts from. But, in analogy to our generative AI system, we can think of all the attractors as being “reasonable outputs” for the system. Now what happens if we change the parameters of the system, or in this case, the cellular automaton rule? In particular, what will happen to the attractors? It’s like what we did above in changing weights in a neural net, but a lot simpler.

The particular rule we’re using here has 4 possible colors for each cell, and is defined by just 64 discrete values from 0 to 3. So let’s say we randomly change just one of those values at a time. Here are some examples of what we get, always starting from the same initial condition as in the first picture above:

With a few exceptions these seem to produce results that are at least “roughly similar” to what we got without changing the rule. In analogy to what we did above, the cat might have changed, but it’s still more or less a cat. But let’s now try “progressive randomization”, where we modify successively more values in the definition of the rule. For a while we again get “roughly similar” results, but then, much as in our cat examples above, things eventually “collapse” and we get “much more random” results:
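
A 4-color rule specified by 64 values matches a nearest-neighbor cellular automaton, since a cell and its two neighbors can be in 4³ = 64 configurations; that reading is an assumption, as is everything specific below. The sketch applies such a rule with wraparound boundaries, reassigns a chosen number of rule entries at random, and measures how far the final row moves from the one the unchanged rule produces. The rule in the demo is randomly generated and so is not necessarily class 2 like the article’s rule; all names are hypothetical.

```python
import random

def step(cells, rule):
    """One update of a 4-color, nearest-neighbor cellular automaton with wraparound:
    rule[16*left + 4*center + right] is the new color (0-3) of the center cell."""
    n = len(cells)
    return [rule[16 * cells[i - 1] + 4 * cells[i] + cells[(i + 1) % n]] for i in range(n)]

def run(cells, rule, steps):
    """Apply the rule `steps` times and return the final row."""
    for _ in range(steps):
        cells = step(cells, rule)
    return cells

def mutate(rule, count, rng):
    """Copy the 64-entry rule and give `count` distinct, randomly chosen entries a new color."""
    new = list(rule)
    for idx in rng.sample(range(64), count):
        new[idx] = rng.choice([c for c in range(4) if c != new[idx]])
    return new

def difference(row_a, row_b):
    """Fraction of cells whose colors differ between two rows."""
    return sum(a != b for a, b in zip(row_a, row_b)) / len(row_a)

if __name__ == "__main__":
    rng = random.Random(0)
    rule = [rng.randrange(4) for _ in range(64)]     # hypothetical rule, not the article's
    start = [rng.randrange(4) for _ in range(60)]
    reference = run(start, rule, 100)
    for count in (1, 4, 16, 64):                     # progressive randomization
        changed = run(start, mutate(rule, count, rng), 100)
        print(count, round(difference(reference, changed), 2))
```

Changing one entry only matters if the evolution actually visits that neighborhood, which is one reason single changes often leave the final state “roughly similar”, while changing many entries eventually scrambles it.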

One important difference between “stable diffusion” and cellular automata is that while in cellular automata the evolution can lead to continued change forever, in stable diffusion there’s an annealing process that always makes successive steps “progressively smaller”, and essentially forces a fixed point to be reached.

But notwithstanding this, we can try to get a closer analogy to image generation by looking (again as above) at 2D cellular automata. Here’s an example of the (not-too-exciting-as-images) “final states” reached from three different initial states in a particular rule:

And here’s what happens if one progressively changes the rule:

At first one still gets “reasonable-according-to-the-original-rule” final states. But if one changes the rule further, things get “more alien”, until they look to us quite random.

In changing the rule, one is in effect “moving in rulial space”. And by looking at how this works in cellular automata, one can get a certain amount of intuition. (Changes to the rule in a cellular automaton seem a bit like “changes to the genotype” in biology, with the behavior of the cellular automaton representing the corresponding “phenotype”.) But seeing how “rulial motion” works in a generative AI that’s been trained on “human-style input” gives a more accessible and humanized picture of what’s going on, even if it seems still further out of reach in terms of any kind of traditional explicit formalization.

Thanks

This project is the first I’ve been able to do with our new Wolfram Institute. I thank our Fourmilab Fellow Nik Murzin and Ruliad Fellow Richard Assar for help. I also thank Jeff Arle, Nicolò Monti, Philip Rosedale and the Wolfram Research Machine Learning Group.

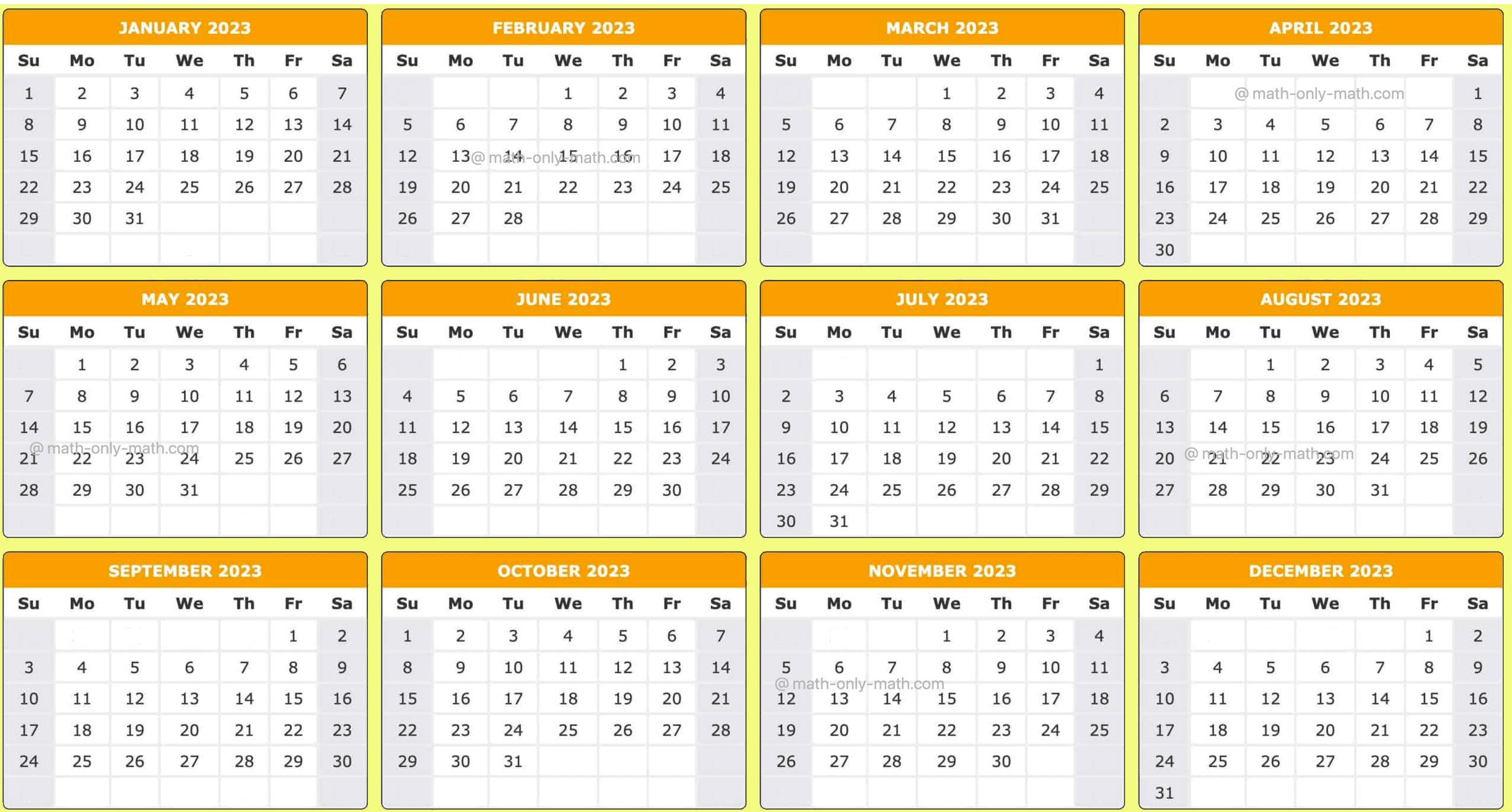

[ad_2]