Launching the Wolfram Prompt Repository - Stephen Wolfram Writings


Building Blocks of “LLM Programming”

Prompts are how one channels an LLM to do something. LLMs in a sense always have lots of “latent capability” (e.g. from their training on billions of webpages). But prompts, in a way that’s still scientifically mysterious, are what let one “engineer” what part of that capability to bring out.

The functionality described here will be built into the upcoming version of Wolfram Language (Version 13.3). To install it in the now-current version (Version 13.2), use

PacletInstall["Wolfram/Chatbook"]

and

PacletInstall["Wolfram/LLMFunctions"].

You will also need an API key for the OpenAI LLM or another LLM.

There are many different ways to use prompts. One can use them, for example, to tell an LLM to “adopt a particular persona”. One can use them to effectively get the LLM to “apply a certain function” to its input. And one can use them to get the LLM to frame its output in a particular way, or to call out to tools in a certain way.

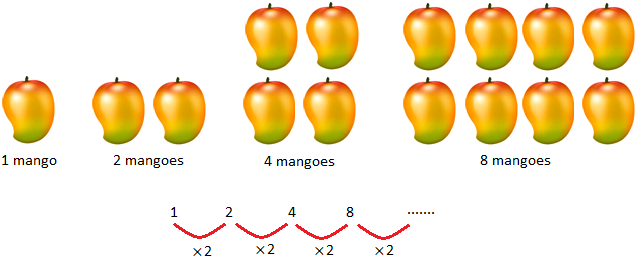

And much as functions are the building blocks for computational programming (say in the Wolfram Language), so prompts are the building blocks for “LLM programming”. And, much like functions, there are prompts that correspond to “lumps of functionality” that one can expect will be repeatedly used.

Today we’re launching the Wolfram Prompt Repository to provide a curated collection of useful community-contributed prompts, set up to be seamlessly accessible both interactively in Chat Notebooks and programmatically in things like LLMFunction:

As a first example, let’s talk about the “Yoda” prompt, which is listed as a “persona prompt”. Here’s its page:

So how do we use this prompt? If we’re using a Chat Notebook (say obtained from File > New > Chat-Driven Notebook) then just typing @Yoda will “invoke” the Yoda persona:

At a programmatic level, one can “invoke the persona” through LLMPrompt (the result is different because there’s by default randomness involved):
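
As a minimal sketch of this programmatic route, assuming Version 13.3 (or the paclets above) and an already configured LLM API key, a persona prompt can be combined with ordinary text in LLMSynthesize. The question string is only an illustrative assumption, and the output will vary from run to run:

```wolfram
(* Assumes an LLM service connection (e.g. an OpenAI API key) is already configured *)
(* The question text is a made-up example, not taken from the original post *)
LLMSynthesize[{LLMPrompt["Yoda"], "What is the best way to learn programming?"}]
```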


There are several initial categories of prompts in the Prompt Repository:

There’s a certain amount of crossover between these categories (and there’ll be more categories in the future, particularly related to generating computable results, and calling computational tools). But there are different ways to use prompts in different categories.

Function prompts are all about taking existing text, and transforming it in some way. We can do this programmatically using LLMResourceFunction:
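
A small sketch of what such a call can look like, assuming the ActiveVoiceRephrase prompt mentioned below and a configured LLM connection. The input sentence is a hypothetical example:

```wolfram
(* Look up the function prompt by name in the Prompt Repository *)
rephrase = LLMResourceFunction["ActiveVoiceRephrase"];

(* Apply it to a passive-voice sentence; the sentence is an illustrative assumption *)
rephrase["The report was written by the committee."]
```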


We can also do it in a Chat Notebook using !ActiveVoiceRephrase, with the shorthand ^ to refer to text in the cell above, and > to refer to text in the current chat cell:

Modifier prompts have to do with specifying how to modify output coming from the LLM. In this case, the LLM typically produces a whole mini-essay:


But with the YesNo modifier prompt, it simply says “Yes”:
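
The contrast can be sketched roughly as follows, assuming a configured LLM connection. The question is an illustrative assumption, and the exact wording of both answers will vary:

```wolfram
(* Hypothetical question used only for illustration *)
question = "Is a watermelon bigger than a human head?";

(* Without a modifier, the LLM typically answers at length *)
LLMSynthesize[question]

(* With the YesNo modifier prompt appended, the answer should be terse *)
LLMSynthesize[{question, LLMPrompt["YesNo"]}]
```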


In a Chat Notebook, you can introduce a modifier prompt using #:

Quite often you’ll want several modifier prompts:

What Does Having a Prompt Repository Do for One?

LLMs are powerful things. And one might wonder why, if one has a description for a prompt, one can’t just use that description directly, rather than having to store a prewritten prompt. Well, sometimes just using the description will indeed work fine. But often it won’t. Sometimes that’s because one needs to clarify further what one wants. Sometimes it’s because there are not-immediately-obvious corner cases to cover. And sometimes there’s just a certain amount of “LLM wrangling” to be done. And this all adds up to the need to do at least some “prompt engineering” on almost any prompt.

The YesNo modifier prompt from above is currently fairly simple:


But it’s still already complicated enough that one doesn’t want to have to repeat it every time one’s trying to force a yes/no answer. And no doubt there’ll be subsequent versions of this prompt (that, yes, will have versioning handled seamlessly by the Prompt Repository) that will get increasingly elaborate, as more cases show up, and more prompt engineering gets done to address them.

Many of the prompts in the Prompt Repository even now are considerably more complicated. Some contain typical “general prompt engineering”, but others contain for example special information that the LLM doesn’t intrinsically know, or detailed examples that home in on what one wants to have happen.

In the simplest cases, prompts (like the YesNo one above) are just plain pieces of text. But often they contain parameters, or have additional computational or other content. And a key feature of the Wolfram Prompt Repository is that it can handle this ancillary material, ultimately by representing everything using Wolfram Language symbolic expressions.

As we discussed in connection with LLMFunction, etc. in another post, the core “textual” part of a prompt is represented by a symbolic StringTemplate that immediately allows positional or named parameters. Then there can be an interpreter that applies a Wolfram Language Interpreter function to the raw textual output of the LLM, transforming it from plain text to a computable symbolic expression. More sophisticatedly, there can also be specifications of tools that the LLM can call (represented symbolically as LLMTool constructs), as well as other information about the required LLM configuration (represented by an LLMConfiguration object). But the key point is that all of this is automatically “packaged up” in the Prompt Repository.
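
To make the template and interpreter pieces concrete, here is a minimal sketch. The template strings and the name legCount are hypothetical. The first two expressions run without any LLM, while the last assumes a configured LLM connection:

```wolfram
(* Positional parameters: slots are filled from a list *)
TemplateApply[StringTemplate["Compare `1` with `2`."], {"apples", "oranges"}]

(* Named parameters: slots are filled from an association *)
TemplateApply[
  StringTemplate["Translate into `language`: `text`"],
  <|"language" -> "French", "text" -> "Good morning"|>
]

(* An LLMFunction whose raw text output is passed through Interpreter["Number"] *)
legCount = LLMFunction["How many legs does a `` have? Reply with digits only.", "Number"];
legCount["spider"]
```

The second argument of LLMFunction plays the role of the interpreter described above: it turns the LLM’s text into a computable expression, or a failure object if the text cannot be interpreted.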

But what actually is the Wolfram Prompt Repository? Well, ultimately it’s just part of the general Wolfram Resource System, the same one that’s used for the Wolfram Function Repository, Wolfram Data Repository, Wolfram Neural Net Repository, Wolfram Notebook Archive, and many other things.

And so, for example, the “Yoda” prompt is in the end represented by a symbolic ResourceObject that’s part of the Resource System:


Open up the display of this resource object, and we’ll immediately see various pieces of metadata (and a link to documentation), as well as the ultimate canonical UUID of the object:


Everything that needs to use the prompt (Chat Notebooks, LLMPrompt, LLMResourceFunction, etc.) just works by accessing appropriate parts of the ResourceObject, so that for example the “hero image” (used for the persona icon) is retrieved like this:


There’s a lot of important infrastructure that “comes for free” from the general Wolfram Resource System, like efficient caching, automatic updating, documentation access, etc. And things like LLMPrompt follow the exact same approach as things like NetModel in being able to immediately reference entries in a repository.

What’s in the Prompt Repository So Far

We haven’t been working on the Wolfram Prompt Repository for very long, and we’re just opening it up for outside contributions now. But already the Repository contains (as of today) about two hundred prompts. So what are they so far? Well, it’s a range. From “just for fun”, to very practical, useful and sometimes quite technical.

In the “just for fun” category, there are all sorts of personas, including:

There are also slightly more “practical” personas, like SupportiveFriend and SportsCoach, which can be more helpful sometimes than others:

Then there are “functional” ones like NutritionistBot, etc., though most of these are still very much under development, and will advance considerably when they are hooked up to tools, so they’re able to access accurate computable knowledge, external data, etc.

But the largest category of prompts so far in the Prompt Repository are function prompts: prompts which take text you supply, and do operations on it. Some are based on straightforward (at least for an LLM) text transformations:

There are all sorts of text transformations that can be useful:

Some function prompts (like Summarize, TLDR, NarrativeToResume, etc.) can be very useful in making text easier to assimilate. And the same is true of things like LegalDejargonize, MedicalDejargonize, ScientificDejargonize, BizDejargonize, or, depending on your background, the *Jargonize versions of these:

Some text transformation prompts seem to perhaps make use of a little more “cultural awareness” on the part of the LLM:

Some function prompts are for analyzing text (or, for example, for doing educational assessments):

Sometimes prompts are most useful when they’re applied programmatically. Here are two synthesized sentences:


Now we can use the DocumentCompare prompt to compare them (something that might, for example, be useful in regression testing):


There are other kinds of “text analysis” prompts, like GlossaryGenerate, CharacterList (characters mentioned in a piece of fiction) and LOCTopicSuggest (Library of Congress book topics):

There are lots of other function prompts already in the Prompt Repository. Some, like FilenameSuggest and CodeImport, are aimed at doing computational tasks. Others make use of common-sense knowledge. And some are just fun. But, yes, writing good prompts is hard, and what’s in the Prompt Repository will gradually improve. And when there are bugs, they can be pretty weird. Like PunAbout is supposed to generate a pun about some topic, but here it decides to protest and say it must generate three:

The final category of prompts currently in the Prompt Repository are modifier prompts, intended as a way to modify the output generated by the LLM. Sometimes modifier prompts can be essentially textual:

But often modifier prompts are intended to create output in a particular form, suitable, for example, for interpretation by an interpreter in LLMFunction, etc.:

So far the modifier prompts in the Prompt Repository are fairly simple. But once there are prompts that make use of tools (i.e. call back into Wolfram Language during the generation process) we can expect modifier prompts that are much more sophisticated, useful and robust.

Adding Your Own Prompts

The Wolfram Prompt Repository is set up to be a curated public collection of prompts where it’s easy for anyone to submit a new prompt. But, as we’ll explain, you can also use the framework of the Prompt Repository to store “private” prompts, or share them with specific groups.

So how do you define a new prompt in the Prompt Repository framework? The easiest way is to fill out a Prompt Resource Definition Notebook:

You can get this notebook here, or from the Submit a Prompt button at the top of the Prompt Repository website, or by evaluating CreateNotebook["PromptResource"].

The setup is directly analogous to the ones for the Wolfram Function Repository, Wolfram Data Repository, Wolfram Neural Net Repository, etc. And once you’ve filled out the Definition Notebook, you’ve got various choices:

Submit to Repository sends the prompt to our curation team for our official Wolfram Prompt Repository; Deploy deploys it for your own use, and for people (or AIs) you choose to share it with. If you’re using the prompt “privately”, you can refer to it using its URI or other identifier (if you use ResourceRegister you can also just refer to it by the name you give it).

OK, so what do you have to specify in the Definition Notebook? The most important part is the actual prompt itself. And quite often the prompt may just be a (carefully crafted) piece of plain text. But ultimately, as discussed elsewhere, a prompt is a symbolic template that can include parameters. And you can insert parameters into a prompt using “template slots”:

(Template Expression lets you insert Wolfram Language code that will be evaluated when the prompt is applied, so you can for example include the current time with Now.)
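
Outside the Definition Notebook, the same two ideas can be sketched with an ordinary StringTemplate, on the assumption that template slots and template expressions correspond to the standard named-slot and <* ... *> syntax. The template text is hypothetical, and DateString[] stands in here for Now so that the inserted value is plain text:

```wolfram
(* <* ... *> holds Wolfram Language code that runs each time the template is applied *)
template = StringTemplate[
  "The current date and time is <* DateString[] *>. Summarize: `text`"
];

(* The named slot is filled from the association; the date is computed at application time *)
TemplateApply[template, <|"text" -> "Prompts are the building blocks of LLM programming."|>]
```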

In simple cases, all you’ll need to specify is the “pure prompt”. But in more sophisticated cases you’ll also want to specify some “outside the prompt” information, and there are some sections for this in the Definition Notebook:

Chat-Related Features is most relevant for personas:

You can give an icon that will appear in Chat Notebooks for that persona. And then you can give Wolfram Language functions which are to be applied to the contents of each chat cell before it is fed to the LLM (“Cell Processing Function”), and to the output generated by the LLM (“Cell Post Evaluation Function”). These functions are useful in transforming material to and from the plain text consumed by the LLM, and supporting richer display and computational structures.

Programmatic Features is particularly relevant for function prompts, and for the way prompts are used in LLMResourceFunction etc.:

There’s “function-oriented documentation” (analogous to what’s used for built-in Wolfram Language functions, or for functions in the Wolfram Function Repository). And then there’s the Output Interpreter: a function to be applied to the textual output of the LLM, to generate the actual expression that will be returned by LLMResourceFunction, or for formatting in a Chat Notebook.

What about the LLM Configuration section?

The first thing it does is to define tools that can be requested by the LLM when this prompt is used. We’ll discuss tools in another post. But as we’ve mentioned several times, they’re a way of having the LLM call Wolfram Language to get particular computational results that are then returned to the LLM. The other part of the LLM Configuration section is a more general LLMConfiguration specification, which can include “temperature” settings, the requirement of using a particular underlying model (e.g. GPT-4), etc.
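
As a rough sketch of the second part, assuming Version 13.3 and a configured OpenAI connection, an LLMConfiguration can be built from an association of settings and passed to a single call. The model name, temperature value and prompt text are hypothetical choices for illustration only:

```wolfram
(* Hypothetical settings chosen only for illustration *)
config = LLMConfiguration[<|"Model" -> "gpt-4", "Temperature" -> 0.2|>];

(* Use the configuration for one call via the LLMEvaluator option *)
LLMSynthesize[
  "Suggest a title for a post about reusable prompts.",
  LLMEvaluator -> config
]
```

A lower temperature generally makes the output less random, which can matter when the result is fed to an interpreter.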

What else is in the Definition Notebook? There are two main documentation sections: one for Chat Examples, and one for Programmatic Examples. Then there are various kinds of metadata.

Of course, at the very top of the Definition Notebook there’s another very important thing: the name you specify for the prompt. And here, with the initial prompts we’ve put into the Prompt Repository, we’ve started to develop some conventions. Following typical Wolfram Language usage we’re “camel-casing” names (so it’s “TitleSuggest” not “title suggest”). Then we try to use different grammatical forms for different kinds of prompts. For personas we try to use noun phrases (like “Cheerleader” or “SommelierBot”). For functions we usually try to use verb phrases (like “Summarize” or “HypeUp”). And for modifiers we try to use past-tense verb forms (like “Translated” or “HaikuStyled”).

The overall goal with prompt names, like with ordinary Wolfram Language function names, is to provide a summary of what the prompt does, in a form that’s short enough that it appears a bit like a word in computational language input, chats, etc.

OK, so let’s say you’ve filled out a Definition Notebook, and you Deploy it. You’ll get a webpage that includes the documentation you’ve given, and looks pretty much like any of the pages in the Wolfram Prompt Repository. And now if you want to use the prompt, you can just click the appropriate place on the webpage, and you’ll get a copyable version that you can immediately paste into an input cell, a chat cell, etc. (Within a Chat Notebook there’s an even more direct mechanism: in the chat icon menu, go to Add & Manage Personas, and when you browse the Prompt Repository, there’ll be an Install button that will automatically install a persona.)

A Language of Prompts

LLMs fundamentally deal with natural language of the kind we humans normally use. But when we set up a named prompt we’re in a sense defining a “higher-level word” that can be used to “communicate” with the LLM, at least with the kind of “harness” that LLMFunction, Chat Notebooks, etc. provide. And we can then imagine in effect “talking in prompts” and for example building up more and more levels of prompts.

Of course, we already have a major example of something that at least in outline is similar: the way in which over the past few decades we’ve been able to progressively construct a whole tower of functionality from the built-in functions in the Wolfram Language. There’s an important difference, however: in defining built-in functions we’re always working on “solid ground”, with precise (carefully designed) computational specifications for what we’re doing. In setting up prompts for an LLM, try as we might to “write the prompts well” we’re in a sense ultimately “at the mercy of the LLM” and how it chooses to handle things.

It feels in some ways like the difference between dealing with engineering systems and with human organizations. In both cases one can set up plans and procedures for what should happen. In the engineering case, however, one can expect that (at least at the level of individual operations) the system will do exactly as one says. In the human case, well, all kinds of things can happen. That is not to say that amazing results can’t be achieved by human organizations; history clearly shows they can.

But, as someone who’s managed (human) organizations now for more than four decades, I think I can say the “rhythm” and practices of dealing with human organizations differ in significant ways from those for technological ones. There’s still a definite pattern of what to do, but it’s different, with a different way of going back and forth to get results, different approaches to “debugging”, etc.

How will it work with prompts? It’s something we still need to get used to. But for me there’s immediately another useful “comparable”. Back in the early 2000s we’d had a decade or two of experience in developing what’s now Wolfram Language, with its precise formal specifications, carefully designed with consistency in mind. But then we started working on Wolfram|Alpha, where now we wanted a system that would just deal with whatever input someone might provide. At first it was jarring. How could we develop any kind of manageable system based on boatloads of potentially incompatible heuristics? It took a little while, but eventually we realized that when everything is a heuristic there’s a certain pattern and structure to that. And over time the development we do has become progressively more systematic.

And so, I expect, it will be with prompts. In the Wolfram Prompt Repository today, we have a collection of prompts that cover a variety of areas, but are almost all “first level”, in the sense that they depend only on the base LLM, and not on other prompts. But over time I expect there’ll be whole hierarchies of prompts that develop (including metaprompts for building prompts, etc.). And indeed I won’t be surprised if in this way all sorts of “repeatable lumps of functionality” are found, that actually can be implemented in a direct computational way, without depending on LLMs. (And, yes, this may well go through the kind of “semantic grammar” structure that I’ve discussed elsewhere.)

But as of now, we’re still just at the point of first launching the Wolfram Prompt Repository, and beginning the process of understanding the range of things, both useful and fun, that can be achieved with prompts. But it’s already clear that there’s going to be a very interesting world of prompts, and a progressive development of “prompt language” that in some ways will probably parallel (though at a considerably faster rate) the historical development of ordinary human languages.

It’s going to be a community effort, just as it is with ordinary human languages, to explore and build out “prompt language”. And now that it’s launched, I’m excited to see how people will use our Prompt Repository, and just what remarkable things end up being possible through it.
