What Is ChatGPT Doing … and Why Does It Work?—Stephen Wolfram Writings

[ad_1]

“Wolfram|Alpha because the Technique to Carry Computational Data Superpowers to ChatGPT” »A dialogue concerning the historical past of neural nets »

It’s Simply Including One Phrase at a Time

That ChatGPT can robotically generate one thing that reads even superficially like human-written textual content is exceptional, and surprising. However how does it do it? And why does it work? My objective right here is to provide a tough define of what’s happening inside ChatGPT—after which to discover why it’s that it could actually accomplish that nicely in producing what we’d take into account to be significant textual content. I ought to say on the outset that I’m going to give attention to the massive image of what’s happening—and whereas I’ll point out some engineering particulars, I gained’t get deeply into them. (And the essence of what I’ll say applies simply as nicely to different present “massive language fashions” [LLMs] as to ChatGPT.)

The very first thing to clarify is that what ChatGPT is at all times basically making an attempt to do is to provide a “affordable continuation” of no matter textual content it’s obtained up to now, the place by “affordable” we imply “what one may anticipate somebody to jot down after seeing what folks have written on billions of webpages, and many others.”

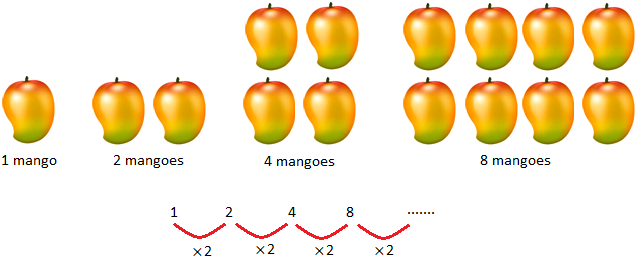

So let’s say we’ve obtained the textual content “The very best factor about AI is its capacity to”. Think about scanning billions of pages of human-written textual content (say on the net and in digitized books) and discovering all cases of this textual content—then seeing what phrase comes subsequent what fraction of the time. ChatGPT successfully does one thing like this, besides that (as I’ll clarify) it doesn’t have a look at literal textual content; it appears for issues that in a sure sense “match in which means”. However the finish result’s that it produces a ranked listing of phrases that may observe, along with “possibilities”:

And the exceptional factor is that when ChatGPT does one thing like write an essay what it’s basically doing is simply asking over and over “given the textual content up to now, what ought to the subsequent phrase be?”—and every time including a phrase. (Extra exactly, as I’ll clarify, it’s including a “token”, which could possibly be simply part of a phrase, which is why it could actually typically “make up new phrases”.)

However, OK, at every step it will get a listing of phrases with possibilities. However which one ought to it truly choose so as to add to the essay (or no matter) that it’s writing? One may assume it needs to be the “highest-ranked” phrase (i.e. the one to which the very best “chance” was assigned). However that is the place a little bit of voodoo begins to creep in. As a result of for some motive—that possibly at some point we’ll have a scientific-style understanding of—if we at all times choose the highest-ranked phrase, we’ll sometimes get a really “flat” essay, that by no means appears to “present any creativity” (and even typically repeats phrase for phrase). But when typically (at random) we choose lower-ranked phrases, we get a “extra attention-grabbing” essay.

The truth that there’s randomness right here signifies that if we use the identical immediate a number of occasions, we’re more likely to get totally different essays every time. And, in step with the thought of voodoo, there’s a selected so-called “temperature” parameter that determines how typically lower-ranked phrases will probably be used, and for essay technology, it seems {that a} “temperature” of 0.8 appears finest. (It’s price emphasizing that there’s no “idea” getting used right here; it’s only a matter of what’s been discovered to work in observe. And for instance the idea of “temperature” is there as a result of exponential distributions acquainted from statistical physics occur to be getting used, however there’s no “bodily” connection—at the very least as far as we all know.)

Earlier than we go on I ought to clarify that for functions of exposition I’m largely not going to make use of the full system that’s in ChatGPT; as an alternative I’ll often work with an easier GPT-2 system, which has the good characteristic that it’s sufficiently small to have the ability to run on a regular desktop laptop. And so for basically the whole lot I present I’ll be capable to embody express Wolfram Language code you can instantly run in your laptop. (Click on any image right here to repeat the code behind it.)

For instance, right here’s tips on how to get the desk of possibilities above. First, we’ve got to retrieve the underlying “language mannequin” neural internet:

In a while, we’ll look inside this neural internet, and speak about the way it works. However for now we will simply apply this “internet mannequin” as a black field to our textual content up to now, and ask for the highest 5 phrases by chance that the mannequin says ought to observe:

This takes that consequence and makes it into an express formatted “dataset”:

Right here’s what occurs if one repeatedly “applies the mannequin”—at every step including the phrase that has the highest chance (specified on this code because the “resolution” from the mannequin):

What occurs if one goes on longer? On this (“zero temperature”) case what comes out quickly will get fairly confused and repetitive:

However what if as an alternative of at all times choosing the “high” phrase one typically randomly picks “non-top” phrases (with the “randomness” similar to “temperature” 0.8)? Once more one can construct up textual content:

And each time one does this, totally different random decisions will probably be made, and the textual content will probably be totally different—as in these 5 examples:

It’s price mentioning that even at step one there are a variety of doable “subsequent phrases” to select from (at temperature 0.8), although their possibilities fall off fairly rapidly (and, sure, the straight line on this log-log plot corresponds to an n–1 “power-law” decay that’s very attribute of the final statistics of language):

So what occurs if one goes on longer? Right here’s a random instance. It’s higher than the top-word (zero temperature) case, however nonetheless at finest a bit bizarre:

This was executed with the easiest GPT-2 mannequin (from 2019). With the newer and greater GPT-3 fashions the outcomes are higher. Right here’s the top-word (zero temperature) textual content produced with the identical “immediate”, however with the largest GPT-3 mannequin:

And right here’s a random instance at “temperature 0.8”:

The place Do the Chances Come From?

OK, so ChatGPT at all times picks its subsequent phrase primarily based on possibilities. However the place do these possibilities come from? Let’s begin with an easier downside. Let’s take into account producing English textual content one letter (fairly than phrase) at a time. How can we work out what the chance for every letter needs to be?

A really minimal factor we might do is simply take a pattern of English textual content, and calculate how typically totally different letters happen in it. So, for instance, this counts letters within the Wikipedia article on “cats”:

And this does the identical factor for “canines”:

The outcomes are related, however not the identical (“o” is little doubt extra frequent within the “canines” article as a result of, in any case, it happens within the phrase “canine” itself). Nonetheless, if we take a big sufficient pattern of English textual content we will anticipate to ultimately get at the very least pretty constant outcomes:

Right here’s a pattern of what we get if we simply generate a sequence of letters with these possibilities:

We are able to break this into “phrases” by including in areas as in the event that they had been letters with a sure chance:

We are able to do a barely higher job of constructing “phrases” by forcing the distribution of “phrase lengths” to agree with what it’s in English:

We didn’t occur to get any “precise phrases” right here, however the outcomes are trying barely higher. To go additional, although, we have to do extra than simply choose every letter individually at random. And, for instance, we all know that if we’ve got a “q”, the subsequent letter principally needs to be “u”.

Right here’s a plot of the chances for letters on their very own:

And right here’s a plot that exhibits the chances of pairs of letters (“2-grams”) in typical English textual content. The doable first letters are proven throughout the web page, the second letters down the web page:

And we see right here, for instance, that the “q” column is clean (zero chance) besides on the “u” row. OK, so now as an alternative of producing our “phrases” a single letter at a time, let’s generate them taking a look at two letters at a time, utilizing these “2-gram” possibilities. Right here’s a pattern of the consequence—which occurs to incorporate a number of “precise phrases”:

With sufficiently a lot English textual content we will get fairly good estimates not only for possibilities of single letters or pairs of letters (2-grams), but in addition for longer runs of letters. And if we generate “random phrases” with progressively longer n-gram possibilities, we see that they get progressively “extra lifelike”:

However let’s now assume—roughly as ChatGPT does—that we’re coping with entire phrases, not letters. There are about 40,000 fairly generally used phrases in English. And by taking a look at a big corpus of English textual content (say a number of million books, with altogether a number of hundred billion phrases), we will get an estimate of how frequent every phrase is. And utilizing this we will begin producing “sentences”, through which every phrase is independently picked at random, with the identical chance that it seems within the corpus. Right here’s a pattern of what we get:

Not surprisingly, that is nonsense. So how can we do higher? Similar to with letters, we will begin making an allowance for not simply possibilities for single phrases however possibilities for pairs or longer n-grams of phrases. Doing this for pairs, listed below are 5 examples of what we get, in all instances ranging from the phrase “cat”:

It’s getting barely extra “wise trying”. And we’d think about that if we had been in a position to make use of sufficiently lengthy n-grams we’d principally “get a ChatGPT”—within the sense that we’d get one thing that might generate essay-length sequences of phrases with the “right total essay possibilities”. However right here’s the issue: there simply isn’t even near sufficient English textual content that’s ever been written to have the ability to deduce these possibilities.

In a crawl of the online there could be a number of hundred billion phrases; in books which were digitized there could be one other hundred billion phrases. However with 40,000 frequent phrases, even the variety of doable 2-grams is already 1.6 billion—and the variety of doable 3-grams is 60 trillion. So there’s no manner we will estimate the chances even for all of those from textual content that’s on the market. And by the point we get to “essay fragments” of 20 phrases, the variety of potentialities is bigger than the variety of particles within the universe, so in a way they might by no means all be written down.

So what can we do? The large thought is to make a mannequin that lets us estimate the chances with which sequences ought to happen—though we’ve by no means explicitly seen these sequences within the corpus of textual content we’ve checked out. And on the core of ChatGPT is exactly a so-called “massive language mannequin” (LLM) that’s been constructed to do a great job of estimating these possibilities.

What Is a Mannequin?

Say you wish to know (as Galileo did again within the late 1500s) how lengthy it’s going to take a cannon ball dropped from every flooring of the Tower of Pisa to hit the bottom. Nicely, you possibly can simply measure it in every case and make a desk of the outcomes. Or you possibly can do what’s the essence of theoretical science: make a mannequin that offers some sort of process for computing the reply fairly than simply measuring and remembering every case.

Let’s think about we’ve got (considerably idealized) knowledge for a way lengthy the cannon ball takes to fall from varied flooring:

How will we work out how lengthy it’s going to take to fall from a flooring we don’t explicitly have knowledge about? On this explicit case, we will use recognized legal guidelines of physics to work it out. However say all we’ve obtained is the info, and we don’t know what underlying legal guidelines govern it. Then we’d make a mathematical guess, like that maybe we should always use a straight line as a mannequin:

We might choose totally different straight traces. However that is the one which’s on common closest to the info we’re given. And from this straight line we will estimate the time to fall for any flooring.

How did we all know to attempt utilizing a straight line right here? At some degree we didn’t. It’s simply one thing that’s mathematically easy, and we’re used to the truth that a lot of knowledge we measure seems to be nicely match by mathematically easy issues. We might attempt one thing mathematically extra difficult—say a + b x + c x2—after which on this case we do higher:

Issues can go fairly incorrect, although. Like right here’s one of the best we will do with a + b/x + c sin(x):

It’s price understanding that there’s by no means a “model-less mannequin”. Any mannequin you employ has some explicit underlying construction—then a sure set of “knobs you may flip” (i.e. parameters you may set) to suit your knowledge. And within the case of ChatGPT, a lot of such “knobs” are used—truly, 175 billion of them.

However the exceptional factor is that the underlying construction of ChatGPT—with “simply” that many parameters—is adequate to make a mannequin that computes next-word possibilities “nicely sufficient” to provide us affordable essay-length items of textual content.

Fashions for Human-Like Duties

The instance we gave above includes making a mannequin for numerical knowledge that basically comes from easy physics—the place we’ve recognized for a number of centuries that “easy arithmetic applies”. However for ChatGPT we’ve got to make a mannequin of human-language textual content of the type produced by a human mind. And for one thing like that we don’t (at the very least but) have something like “easy arithmetic”. So what may a mannequin of it’s like?

Earlier than we speak about language, let’s speak about one other human-like job: recognizing photographs. And as a easy instance of this, let’s take into account photographs of digits (and, sure, this can be a traditional machine studying instance):

One factor we might do is get a bunch of pattern photographs for every digit:

Then to seek out out if a picture we’re given as enter corresponds to a selected digit we might simply do an express pixel-by-pixel comparability with the samples we’ve got. However as people we definitely appear to do one thing higher—as a result of we will nonetheless acknowledge digits, even once they’re for instance handwritten, and have all types of modifications and distortions:

Once we made a mannequin for our numerical knowledge above, we had been in a position to take a numerical worth x that we got, and simply compute a + b x for explicit a and b. So if we deal with the gray-level worth of every pixel right here as some variable xi is there some perform of all these variables that—when evaluated—tells us what digit the picture is of? It seems that it’s doable to assemble such a perform. Not surprisingly, it’s not notably easy, although. And a typical instance may contain maybe half one million mathematical operations.

However the finish result’s that if we feed the gathering of pixel values for a picture into this perform, out will come the quantity specifying which digit we’ve got a picture of. Later, we’ll speak about how such a perform will be constructed, and the thought of neural nets. However for now let’s deal with the perform as black field, the place we feed in photographs of, say, handwritten digits (as arrays of pixel values) and we get out the numbers these correspond to:

However what’s actually happening right here? Let’s say we progressively blur a digit. For a short time our perform nonetheless “acknowledges” it, right here as a “2”. However quickly it “loses it”, and begins giving the “incorrect” consequence:

However why do we are saying it’s the “incorrect” consequence? On this case, we all know we obtained all the photographs by blurring a “2”. But when our aim is to provide a mannequin of what people can do in recognizing photographs, the true query to ask is what a human would have executed if introduced with a kind of blurred photographs, with out figuring out the place it got here from.

And we’ve got a “good mannequin” if the outcomes we get from our perform sometimes agree with what a human would say. And the nontrivial scientific truth is that for an image-recognition job like this we now principally know tips on how to assemble features that do that.

Can we “mathematically show” that they work? Nicely, no. As a result of to do this we’d should have a mathematical idea of what we people are doing. Take the “2” picture and alter a number of pixels. We’d think about that with only some pixels “misplaced” we should always nonetheless take into account the picture a “2”. However how far ought to that go? It’s a query of human visible notion. And, sure, the reply would little doubt be totally different for bees or octopuses—and doubtlessly completely totally different for putative aliens.

Neural Nets

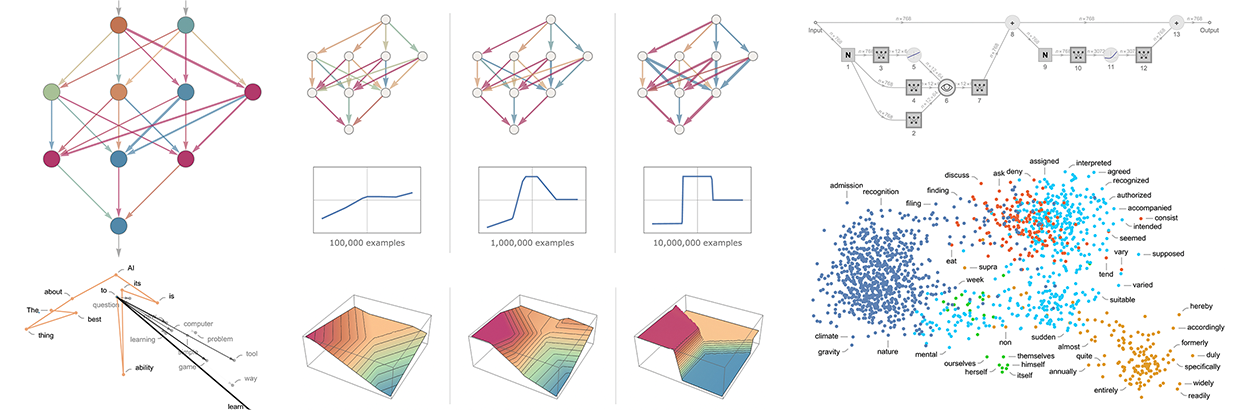

OK, so how do our typical fashions for duties like picture recognition truly work? The most well-liked—and profitable—present method makes use of neural nets. Invented—in a type remarkably near their use in the present day—within the Forties, neural nets will be considered easy idealizations of how brains appear to work.

In human brains there are about 100 billion neurons (nerve cells), every able to producing {an electrical} pulse as much as maybe a thousand occasions a second. The neurons are related in a sophisticated internet, with every neuron having tree-like branches permitting it to cross electrical indicators to maybe 1000’s of different neurons. And in a tough approximation, whether or not any given neuron produces {an electrical} pulse at a given second is dependent upon what pulses it’s obtained from different neurons—with totally different connections contributing with totally different “weights”.

Once we “see a picture” what’s occurring is that when photons of sunshine from the picture fall on (“photoreceptor”) cells behind our eyes they produce electrical indicators in nerve cells. These nerve cells are related to different nerve cells, and ultimately the indicators undergo an entire sequence of layers of neurons. And it’s on this course of that we “acknowledge” the picture, ultimately “forming the thought” that we’re “seeing a 2” (and possibly ultimately doing one thing like saying the phrase “two” out loud).

The “black-box” perform from the earlier part is a “mathematicized” model of such a neural internet. It occurs to have 11 layers (although solely 4 “core layers”):

There’s nothing notably “theoretically derived” about this neural internet; it’s simply one thing that—again in 1998—was constructed as a chunk of engineering, and located to work. (After all, that’s not a lot totally different from how we’d describe our brains as having been produced via the method of organic evolution.)

OK, however how does a neural internet like this “acknowledge issues”? The hot button is the notion of attractors. Think about we’ve obtained handwritten photographs of 1’s and a pair of’s:

We one way or the other need all of the 1’s to “be attracted to at least one place”, and all the two’s to “be attracted to a different place”. Or, put a unique manner, if a picture is one way or the other “nearer to being a 1” than to being a 2, we wish it to finish up within the “1 place” and vice versa.

As a simple analogy, let’s say we’ve got sure positions within the airplane, indicated by dots (in a real-life setting they could be positions of espresso retailers). Then we’d think about that ranging from any level on the airplane we’d at all times wish to find yourself on the closest dot (i.e. we’d at all times go to the closest espresso store). We are able to characterize this by dividing the airplane into areas (“attractor basins”) separated by idealized “watersheds”:

We are able to consider this as implementing a sort of “recognition job” through which we’re not doing one thing like figuring out what digit a given picture “appears most like”—however fairly we’re simply, fairly instantly, seeing what dot a given level is closest to. (The “Voronoi diagram” setup we’re displaying right here separates factors in 2D Euclidean house; the digit recognition job will be considered doing one thing very related—however in a 784-dimensional house fashioned from the grey ranges of all of the pixels in every picture.)

So how will we make a neural internet “do a recognition job”? Let’s take into account this quite simple case:

Our aim is to take an “enter” similar to a place {x,y}—after which to “acknowledge” it as whichever of the three factors it’s closest to. Or, in different phrases, we wish the neural internet to compute a perform of {x,y} like:

So how will we do that with a neural internet? In the end a neural internet is a related assortment of idealized “neurons”—often organized in layers—with a easy instance being:

Every “neuron” is successfully set as much as consider a easy numerical perform. And to “use” the community, we merely feed numbers (like our coordinates x and y) in on the high, then have neurons on every layer “consider their features” and feed the outcomes ahead via the community—ultimately producing the ultimate consequence on the backside:

Within the conventional (biologically impressed) setup every neuron successfully has a sure set of “incoming connections” from the neurons on the earlier layer, with every connection being assigned a sure “weight” (which generally is a optimistic or adverse quantity). The worth of a given neuron is decided by multiplying the values of “earlier neurons” by their corresponding weights, then including these up and including a relentless—and eventually making use of a “thresholding” (or “activation”) perform. In mathematical phrases, if a neuron has inputs

Computing w . x + b is only a matter of matrix multiplication and addition. The “activation perform” f introduces nonlinearity (and finally is what results in nontrivial conduct). Varied activation features generally get used; right here we’ll simply use Ramp (or ReLU):

For every job we wish the neural internet to carry out (or, equivalently, for every total perform we wish it to guage) we’ll have totally different decisions of weights. (And—as we’ll talk about later—these weights are usually decided by “coaching” the neural internet utilizing machine studying from examples of the outputs we wish.)

In the end, each neural internet simply corresponds to some total mathematical perform—although it might be messy to jot down out. For the instance above, it will be:

The neural internet of ChatGPT additionally simply corresponds to a mathematical perform like this—however successfully with billions of phrases.

However let’s return to particular person neurons. Listed below are some examples of the features a neuron with two inputs (representing coordinates x and y) can compute with varied decisions of weights and constants (and Ramp as activation perform):

However what concerning the bigger community from above? Nicely, right here’s what it computes:

It’s not fairly “proper”, but it surely’s near the “nearest level” perform we confirmed above.

Let’s see what occurs with another neural nets. In every case, as we’ll clarify later, we’re utilizing machine studying to seek out your best option of weights. Then we’re displaying right here what the neural internet with these weights computes:

Greater networks usually do higher at approximating the perform we’re aiming for. And within the “center of every attractor basin” we sometimes get precisely the reply we wish. However on the boundaries—the place the neural internet “has a tough time making up its thoughts”—issues will be messier.

With this straightforward mathematical-style “recognition job” it’s clear what the “proper reply” is. However in the issue of recognizing handwritten digits, it’s not so clear. What if somebody wrote a “2” so badly it seemed like a “7”, and many others.? Nonetheless, we will ask how a neural internet distinguishes digits—and this offers a sign:

Can we are saying “mathematically” how the community makes its distinctions? Probably not. It’s simply “doing what the neural internet does”. Nevertheless it seems that that usually appears to agree pretty nicely with the distinctions we people make.

Let’s take a extra elaborate instance. Let’s say we’ve got photographs of cats and canines. And we’ve got a neural internet that’s been skilled to differentiate them. Right here’s what it’d do on some examples:

Now it’s even much less clear what the “proper reply” is. What a couple of canine wearing a cat go well with? And so forth. No matter enter it’s given the neural internet will generate a solution, and in a manner fairly in step with how people may. As I’ve stated above, that’s not a truth we will “derive from first rules”. It’s simply one thing that’s empirically been discovered to be true, at the very least in sure domains. Nevertheless it’s a key motive why neural nets are helpful: that they one way or the other seize a “human-like” manner of doing issues.

Present your self an image of a cat, and ask “Why is {that a} cat?”. Perhaps you’d begin saying “Nicely, I see its pointy ears, and many others.” Nevertheless it’s not very simple to clarify the way you acknowledged the picture as a cat. It’s simply that one way or the other your mind figured that out. However for a mind there’s no manner (at the very least but) to “go inside” and see the way it figured it out. What about for an (synthetic) neural internet? Nicely, it’s simple to see what every “neuron” does while you present an image of a cat. However even to get a fundamental visualization is often very troublesome.

Within the closing internet that we used for the “nearest level” downside above there are 17 neurons. Within the internet for recognizing handwritten digits there are 2190. And within the internet we’re utilizing to acknowledge cats and canines there are 60,650. Usually it will be fairly troublesome to visualise what quantities to 60,650-dimensional house. However as a result of this can be a community set as much as cope with photographs, lots of its layers of neurons are organized into arrays, just like the arrays of pixels it’s taking a look at.

And if we take a typical cat picture

then we will characterize the states of neurons on the first layer by a set of derived photographs—lots of which we will readily interpret as being issues like “the cat with out its background”, or “the define of the cat”:

By the tenth layer it’s tougher to interpret what’s happening:

However typically we’d say that the neural internet is “choosing out sure options” (possibly pointy ears are amongst them), and utilizing these to find out what the picture is of. However are these options ones for which we’ve got names—like “pointy ears”? Largely not.

Are our brains utilizing related options? Largely we don’t know. Nevertheless it’s notable that the primary few layers of a neural internet just like the one we’re displaying right here appear to pick elements of photographs (like edges of objects) that appear to be just like ones we all know are picked out by the primary degree of visible processing in brains.

However let’s say we wish a “idea of cat recognition” in neural nets. We are able to say: “Look, this explicit internet does it”—and instantly that offers us some sense of “how exhausting an issue” it’s (and, for instance, what number of neurons or layers could be wanted). However at the very least as of now we don’t have a strategy to “give a story description” of what the community is doing. And possibly that’s as a result of it actually is computationally irreducible, and there’s no common strategy to discover what it does besides by explicitly tracing every step. Or possibly it’s simply that we haven’t “found out the science”, and recognized the “pure legal guidelines” that permit us to summarize what’s happening.

We’ll encounter the identical sorts of points after we speak about producing language with ChatGPT. And once more it’s not clear whether or not there are methods to “summarize what it’s doing”. However the richness and element of language (and our expertise with it) might permit us to get additional than with photographs.

Machine Studying, and the Coaching of Neural Nets

We’ve been speaking up to now about neural nets that “already know” tips on how to do explicit duties. However what makes neural nets so helpful (presumably additionally in brains) is that not solely can they in precept do all types of duties, however they are often incrementally “skilled from examples” to do these duties.

Once we make a neural internet to differentiate cats from canines we don’t successfully have to jot down a program that (say) explicitly finds whiskers; as an alternative we simply present a lot of examples of what’s a cat and what’s a canine, after which have the community “machine study” from these tips on how to distinguish them.

And the purpose is that the skilled community “generalizes” from the actual examples it’s proven. Simply as we’ve seen above, it isn’t merely that the community acknowledges the actual pixel sample of an instance cat picture it was proven; fairly it’s that the neural internet one way or the other manages to differentiate photographs on the premise of what we take into account to be some sort of “common catness”.

So how does neural internet coaching truly work? Basically what we’re at all times making an attempt to do is to seek out weights that make the neural internet efficiently reproduce the examples we’ve given. After which we’re counting on the neural internet to “interpolate” (or “generalize”) “between” these examples in a “affordable” manner.

Let’s have a look at an issue even easier than the nearest-point one above. Let’s simply attempt to get a neural internet to study the perform:

For this job, we’ll want a community that has only one enter and one output, like:

However what weights, and many others. ought to we be utilizing? With each doable set of weights the neural internet will compute some perform. And, for instance, right here’s what it does with a number of randomly chosen units of weights:

And, sure, we will plainly see that in none of those instances does it get even near reproducing the perform we wish. So how do we discover weights that can reproduce the perform?

The essential thought is to produce a lot of “enter → output” examples to “study from”—after which to attempt to discover weights that can reproduce these examples. Right here’s the results of doing that with progressively extra examples:

At every stage on this “coaching” the weights within the community are progressively adjusted—and we see that ultimately we get a community that efficiently reproduces the perform we wish. So how will we regulate the weights? The essential thought is at every stage to see “how far-off we’re” from getting the perform we wish—after which to replace the weights in such a manner as to get nearer.

To search out out “how far-off we’re” we compute what’s often referred to as a “loss perform” (or typically “price perform”). Right here we’re utilizing a easy (L2) loss perform that’s simply the sum of the squares of the variations between the values we get, and the true values. And what we see is that as our coaching course of progresses, the loss perform progressively decreases (following a sure “studying curve” that’s totally different for various duties)—till we attain a degree the place the community (at the very least to a great approximation) efficiently reproduces the perform we wish:

Alright, so the final important piece to clarify is how the weights are adjusted to scale back the loss perform. As we’ve stated, the loss perform offers us a “distance” between the values we’ve obtained, and the true values. However the “values we’ve obtained” are decided at every stage by the present model of neural internet—and by the weights in it. However now think about that the weights are variables—say wi. We wish to learn the way to regulate the values of those variables to reduce the loss that is dependent upon them.

For instance, think about (in an unimaginable simplification of typical neural nets utilized in observe) that we’ve got simply two weights w1 and w2. Then we’d have a loss that as a perform of w1 and w2 appears like this:

Numerical evaluation offers a wide range of methods for locating the minimal in instances like this. However a typical method is simply to progressively observe the trail of steepest descent from no matter earlier w1, w2 we had:

Like water flowing down a mountain, all that’s assured is that this process will find yourself at some native minimal of the floor (“a mountain lake”); it’d nicely not attain the final word international minimal.

It’s not apparent that it will be possible to seek out the trail of the steepest descent on the “weight panorama”. However calculus involves the rescue. As we talked about above, one can at all times consider a neural internet as computing a mathematical perform—that is dependent upon its inputs, and its weights. However now take into account differentiating with respect to those weights. It seems that the chain rule of calculus in impact lets us “unravel” the operations executed by successive layers within the neural internet. And the result’s that we will—at the very least in some native approximation—“invert” the operation of the neural internet, and progressively discover weights that decrease the loss related to the output.

The image above exhibits the sort of minimization we’d have to do within the unrealistically easy case of simply 2 weights. Nevertheless it seems that even with many extra weights (ChatGPT makes use of 175 billion) it’s nonetheless doable to do the minimization, at the very least to some degree of approximation. And actually the massive breakthrough in “deep studying” that occurred round 2011 was related to the invention that in some sense it may be simpler to do (at the very least approximate) minimization when there are many weights concerned than when there are pretty few.

In different phrases—considerably counterintuitively—it may be simpler to unravel extra difficult issues with neural nets than easier ones. And the tough motive for this appears to be that when one has a variety of “weight variables” one has a high-dimensional house with “a lot of totally different instructions” that may lead one to the minimal—whereas with fewer variables it’s simpler to finish up getting caught in a neighborhood minimal (“mountain lake”) from which there’s no “course to get out”.

It’s price mentioning that in typical instances there are lots of totally different collections of weights that can all give neural nets which have just about the identical efficiency. And often in sensible neural internet coaching there are many random decisions made—that result in “different-but-equivalent options”, like these:

However every such “totally different answer” may have at the very least barely totally different conduct. And if we ask, say, for an “extrapolation” outdoors the area the place we gave coaching examples, we will get dramatically totally different outcomes:

However which of those is “proper”? There’s actually no strategy to say. They’re all “in step with the noticed knowledge”. However all of them correspond to totally different “innate” methods to “take into consideration” what to do “outdoors the field”. And a few could seem “extra affordable” to us people than others.

The Apply and Lore of Neural Internet Coaching

Significantly over the previous decade, there’ve been many advances within the artwork of coaching neural nets. And, sure, it’s principally an artwork. Generally—particularly looking back—one can see at the very least a glimmer of a “scientific rationalization” for one thing that’s being executed. However largely issues have been found by trial and error, including concepts and methods which have progressively constructed a big lore about tips on how to work with neural nets.

There are a number of key elements. First, there’s the matter of what structure of neural internet one ought to use for a selected job. Then there’s the essential problem of how one’s going to get the info on which to coach the neural internet. And more and more one isn’t coping with coaching a internet from scratch: as an alternative a brand new internet can both instantly incorporate one other already-trained internet, or at the very least can use that internet to generate extra coaching examples for itself.

One might need thought that for each explicit sort of job one would want a unique structure of neural internet. However what’s been discovered is that the identical structure typically appears to work even for apparently fairly totally different duties. At some degree this reminds one of many thought of common computation (and my Precept of Computational Equivalence), however, as I’ll talk about later, I believe it’s extra a mirrored image of the truth that the duties we’re sometimes making an attempt to get neural nets to do are “human-like” ones—and neural nets can seize fairly common “human-like processes”.

In earlier days of neural nets, there tended to be the concept one ought to “make the neural internet do as little as doable”. For instance, in changing speech to textual content it was thought that one ought to first analyze the audio of the speech, break it into phonemes, and many others. However what was discovered is that—at the very least for “human-like duties”—it’s often higher simply to attempt to prepare the neural internet on the “end-to-end downside”, letting it “uncover” the required intermediate options, encodings, and many others. for itself.

There was additionally the concept one ought to introduce difficult particular person parts into the neural internet, to let it in impact “explicitly implement explicit algorithmic concepts”. However as soon as once more, this has largely turned out to not be worthwhile; as an alternative, it’s higher simply to cope with quite simple parts and allow them to “arrange themselves” (albeit often in methods we will’t perceive) to attain (presumably) the equal of these algorithmic concepts.

That’s to not say that there aren’t any “structuring concepts” which can be related for neural nets. Thus, for instance, having 2D arrays of neurons with native connections appears at the very least very helpful within the early levels of processing photographs. And having patterns of connectivity that think about “trying again in sequences” appears helpful—as we’ll see later—in coping with issues like human language, for instance in ChatGPT.

However an vital characteristic of neural nets is that—like computer systems typically—they’re finally simply coping with knowledge. And present neural nets—with present approaches to neural internet coaching—particularly cope with arrays of numbers. However in the midst of processing, these arrays will be utterly rearranged and reshaped. And for example, the community we used for figuring out digits above begins with a 2D “image-like” array, rapidly “thickening” to many channels, however then “concentrating down” right into a 1D array that can finally include parts representing the totally different doable output digits:

However, OK, how can one inform how huge a neural internet one will want for a selected job? It’s one thing of an artwork. At some degree the important thing factor is to know “how exhausting the duty is”. However for human-like duties that’s sometimes very exhausting to estimate. Sure, there could also be a scientific strategy to do the duty very “mechanically” by laptop. Nevertheless it’s exhausting to know if there are what one may consider as methods or shortcuts that permit one to do the duty at the very least at a “human-like degree” vastly extra simply. It would take enumerating a large sport tree to “mechanically” play a sure sport; however there could be a a lot simpler (“heuristic”) strategy to obtain “human-level play”.

When one’s coping with tiny neural nets and easy duties one can typically explicitly see that one “can’t get there from right here”. For instance, right here’s one of the best one appears to have the ability to do on the duty from the earlier part with a number of small neural nets:

And what we see is that if the web is just too small, it simply can’t reproduce the perform we wish. However above some dimension, it has no downside—at the very least if one trains it for lengthy sufficient, with sufficient examples. And, by the best way, these photos illustrate a chunk of neural internet lore: that one can typically get away with a smaller community if there’s a “squeeze” within the center that forces the whole lot to undergo a smaller intermediate variety of neurons. (It’s additionally price mentioning that “no-intermediate-layer”—or so-called “perceptron”—networks can solely study basically linear features—however as quickly as there’s even one intermediate layer it’s at all times in precept doable to approximate any perform arbitrarily nicely, at the very least if one has sufficient neurons, although to make it feasibly trainable one sometimes has some sort of regularization or normalization.)

OK, so let’s say one’s settled on a sure neural internet structure. Now there’s the problem of getting knowledge to coach the community with. And most of the sensible challenges round neural nets—and machine studying typically—heart on buying or getting ready the required coaching knowledge. In lots of instances (“supervised studying”) one needs to get express examples of inputs and the outputs one is anticipating from them. Thus, for instance, one may need photographs tagged by what’s in them, or another attribute. And possibly one should explicitly undergo—often with nice effort—and do the tagging. However fairly often it seems to be doable to piggyback on one thing that’s already been executed, or use it as some sort of proxy. And so, for instance, one may use alt tags which were offered for photographs on the net. Or, in a unique area, one may use closed captions which were created for movies. Or—for language translation coaching—one may use parallel variations of webpages or different paperwork that exist in numerous languages.

How a lot knowledge do you might want to present a neural internet to coach it for a selected job? Once more, it’s exhausting to estimate from first rules. Actually the necessities will be dramatically lowered by utilizing “switch studying” to “switch in” issues like lists of vital options which have already been realized in one other community. However usually neural nets have to “see a variety of examples” to coach nicely. And at the very least for some duties it’s an vital piece of neural internet lore that the examples will be extremely repetitive. And certainly it’s a regular technique to only present a neural internet all of the examples one has, over and over. In every of those “coaching rounds” (or “epochs”) the neural internet will probably be in at the very least a barely totally different state, and one way or the other “reminding it” of a selected instance is beneficial in getting it to “keep in mind that instance”. (And, sure, maybe that is analogous to the usefulness of repetition in human memorization.)

However typically simply repeating the identical instance over and over isn’t sufficient. It’s additionally obligatory to indicate the neural internet variations of the instance. And it’s a characteristic of neural internet lore that these “knowledge augmentation” variations don’t should be refined to be helpful. Simply barely modifying photographs with fundamental picture processing could make them basically “nearly as good as new” for neural internet coaching. And, equally, when one’s run out of precise video, and many others. for coaching self-driving vehicles, one can go on and simply get knowledge from operating simulations in a mannequin videogame-like surroundings with out all of the element of precise real-world scenes.

How about one thing like ChatGPT? Nicely, it has the good characteristic that it could actually do “unsupervised studying”, making it a lot simpler to get it examples to coach from. Recall that the fundamental job for ChatGPT is to determine tips on how to proceed a chunk of textual content that it’s been given. So to get it “coaching examples” all one has to do is get a chunk of textual content, and masks out the tip of it, after which use this because the “enter to coach from”—with the “output” being the whole, unmasked piece of textual content. We’ll talk about this extra later, however the principle level is that—in contrast to, say, for studying what’s in photographs—there’s no “express tagging” wanted; ChatGPT can in impact simply study instantly from no matter examples of textual content it’s given.

OK, so what concerning the precise studying course of in a neural internet? In the long run it’s all about figuring out what weights will finest seize the coaching examples which were given. And there are all types of detailed decisions and “hyperparameter settings” (so referred to as as a result of the weights will be considered “parameters”) that can be utilized to tweak how that is executed. There are totally different decisions of loss perform (sum of squares, sum of absolute values, and many others.). There are alternative ways to do loss minimization (how far in weight house to maneuver at every step, and many others.). After which there are questions like how huge a “batch” of examples to indicate to get every successive estimate of the loss one’s making an attempt to reduce. And, sure, one can apply machine studying (as we do, for instance, in Wolfram Language) to automate machine studying—and to robotically set issues like hyperparameters.

However ultimately the entire course of of coaching will be characterised by seeing how the loss progressively decreases (as on this Wolfram Language progress monitor for a small coaching):

And what one sometimes sees is that the loss decreases for some time, however ultimately flattens out at some fixed worth. If that worth is small enough, then the coaching will be thought of profitable; in any other case it’s most likely an indication one ought to attempt altering the community structure.

Can one inform how lengthy it ought to take for the “studying curve” to flatten out? Like for therefore many different issues, there appear to be approximate power-law scaling relationships that rely on the dimensions of neural internet and quantity of knowledge one’s utilizing. However the common conclusion is that coaching a neural internet is difficult—and takes a variety of computational effort. And as a sensible matter, the overwhelming majority of that effort is spent doing operations on arrays of numbers, which is what GPUs are good at—which is why neural internet coaching is often restricted by the provision of GPUs.

Sooner or later, will there be basically higher methods to coach neural nets—or usually do what neural nets do? Nearly definitely, I believe. The basic thought of neural nets is to create a versatile “computing cloth” out of a lot of easy (basically equivalent) parts—and to have this “cloth” be one that may be incrementally modified to study from examples. In present neural nets, one’s basically utilizing the concepts of calculus—utilized to actual numbers—to do this incremental modification. Nevertheless it’s more and more clear that having high-precision numbers doesn’t matter; 8 bits or much less could be sufficient even with present strategies.

With computational methods like mobile automata that principally function in parallel on many particular person bits it’s by no means been clear tips on how to do this type of incremental modification, however there’s no motive to assume it isn’t doable. And actually, very similar to with the “deep-learning breakthrough of 2012” it might be that such incremental modification will successfully be simpler in additional difficult instances than in easy ones.

Neural nets—maybe a bit like brains—are set as much as have an basically fastened community of neurons, with what’s modified being the energy (“weight”) of connections between them. (Maybe in at the very least younger brains vital numbers of wholly new connections also can develop.) However whereas this could be a handy setup for biology, it’s under no circumstances clear that it’s even near one of the best ways to attain the performance we want. And one thing that includes the equal of progressive community rewriting (maybe paying homage to our Physics Venture) may nicely finally be higher.

However even throughout the framework of present neural nets there’s at the moment an important limitation: neural internet coaching because it’s now executed is basically sequential, with the consequences of every batch of examples being propagated again to replace the weights. And certainly with present laptop {hardware}—even making an allowance for GPUs—most of a neural internet is “idle” more often than not throughout coaching, with only one half at a time being up to date. And in a way it’s because our present computer systems are likely to have reminiscence that’s separate from their CPUs (or GPUs). However in brains it’s presumably totally different—with each “reminiscence factor” (i.e. neuron) additionally being a doubtlessly lively computational factor. And if we might arrange our future laptop {hardware} this manner it’d turn out to be doable to do coaching far more effectively.

“Certainly a Community That’s Huge Sufficient Can Do Something!”

The capabilities of one thing like ChatGPT appear so spectacular that one may think that if one might simply “preserve going” and prepare bigger and bigger neural networks, then they’d ultimately be capable to “do the whole lot”. And if one’s involved with issues which can be readily accessible to speedy human considering, it’s fairly doable that that is the case. However the lesson of the previous a number of hundred years of science is that there are issues that may be found out by formal processes, however aren’t readily accessible to speedy human considering.

Nontrivial arithmetic is one huge instance. However the common case is absolutely computation. And finally the problem is the phenomenon of computational irreducibility. There are some computations which one may assume would take many steps to do, however which might in actual fact be “lowered” to one thing fairly speedy. However the discovery of computational irreducibility implies that this doesn’t at all times work. And as an alternative there are processes—most likely just like the one beneath—the place to work out what occurs inevitably requires basically tracing every computational step:

The sorts of issues that we usually do with our brains are presumably particularly chosen to keep away from computational irreducibility. It takes particular effort to do math in a single’s mind. And it’s in observe largely unattainable to “assume via” the steps within the operation of any nontrivial program simply in a single’s mind.

However in fact for that we’ve got computer systems. And with computer systems we will readily do lengthy, computationally irreducible issues. And the important thing level is that there’s typically no shortcut for these.

Sure, we might memorize a lot of particular examples of what occurs in some explicit computational system. And possibly we might even see some (“computationally reducible”) patterns that might permit us to do some generalization. However the level is that computational irreducibility signifies that we will by no means assure that the surprising gained’t occur—and it’s solely by explicitly doing the computation you can inform what truly occurs in any explicit case.

And ultimately there’s only a elementary pressure between learnability and computational irreducibility. Studying includes in impact compressing knowledge by leveraging regularities. However computational irreducibility implies that finally there’s a restrict to what regularities there could also be.

As a sensible matter, one can think about constructing little computational gadgets—like mobile automata or Turing machines—into trainable methods like neural nets. And certainly such gadgets can function good “instruments” for the neural internet—like Wolfram|Alpha generally is a good instrument for ChatGPT. However computational irreducibility implies that one can’t anticipate to “get inside” these gadgets and have them study.

Or put one other manner, there’s an final tradeoff between functionality and trainability: the extra you need a system to make “true use” of its computational capabilities, the extra it’s going to indicate computational irreducibility, and the much less it’s going to be trainable. And the extra it’s basically trainable, the much less it’s going to have the ability to do refined computation.

(For ChatGPT because it at the moment is, the scenario is definitely far more excessive, as a result of the neural internet used to generate every token of output is a pure “feed-forward” community, with out loops, and due to this fact has no capacity to do any sort of computation with nontrivial “management circulation”.)

After all, one may ponder whether it’s truly vital to have the ability to do irreducible computations. And certainly for a lot of human historical past it wasn’t notably vital. However our trendy technological world has been constructed on engineering that makes use of at the very least mathematical computations—and more and more additionally extra common computations. And if we have a look at the pure world, it’s filled with irreducible computation—that we’re slowly understanding tips on how to emulate and use for our technological functions.

Sure, a neural internet can definitely discover the sorts of regularities within the pure world that we’d additionally readily discover with “unaided human considering”. But when we wish to work out issues which can be within the purview of mathematical or computational science the neural internet isn’t going to have the ability to do it—except it successfully “makes use of as a instrument” an “extraordinary” computational system.

However there’s one thing doubtlessly complicated about all of this. Previously there have been loads of duties—together with writing essays—that we’ve assumed had been one way or the other “basically too exhausting” for computer systems. And now that we see them executed by the likes of ChatGPT we are likely to all of the sudden assume that computer systems will need to have turn out to be vastly extra highly effective—specifically surpassing issues they had been already principally in a position to do (like progressively computing the conduct of computational methods like mobile automata).

However this isn’t the precise conclusion to attract. Computationally irreducible processes are nonetheless computationally irreducible, and are nonetheless basically exhausting for computer systems—even when computer systems can readily compute their particular person steps. And as an alternative what we should always conclude is that duties—like writing essays—that we people might do, however we didn’t assume computer systems might do, are literally in some sense computationally simpler than we thought.

In different phrases, the explanation a neural internet will be profitable in writing an essay is as a result of writing an essay seems to be a “computationally shallower” downside than we thought. And in a way this takes us nearer to “having a idea” of how we people handle to do issues like writing essays, or typically cope with language.

If you happen to had a large enough neural internet then, sure, you may be capable to do no matter people can readily do. However you wouldn’t seize what the pure world typically can do—or that the instruments that we’ve customary from the pure world can do. And it’s using these instruments—each sensible and conceptual—which have allowed us in current centuries to transcend the boundaries of what’s accessible to “pure unaided human thought”, and seize for human functions extra of what’s on the market within the bodily and computational universe.

The Idea of Embeddings

Neural nets—at the very least as they’re at the moment arrange—are basically primarily based on numbers. So if we’re going to to make use of them to work on one thing like textual content we’ll want a strategy to characterize our textual content with numbers. And definitely we might begin (basically as ChatGPT does) by simply assigning a quantity to each phrase within the dictionary. However there’s an vital thought—that’s for instance central to ChatGPT—that goes past that. And it’s the thought of “embeddings”. One can consider an embedding as a strategy to attempt to characterize the “essence” of one thing by an array of numbers—with the property that “close by issues” are represented by close by numbers.

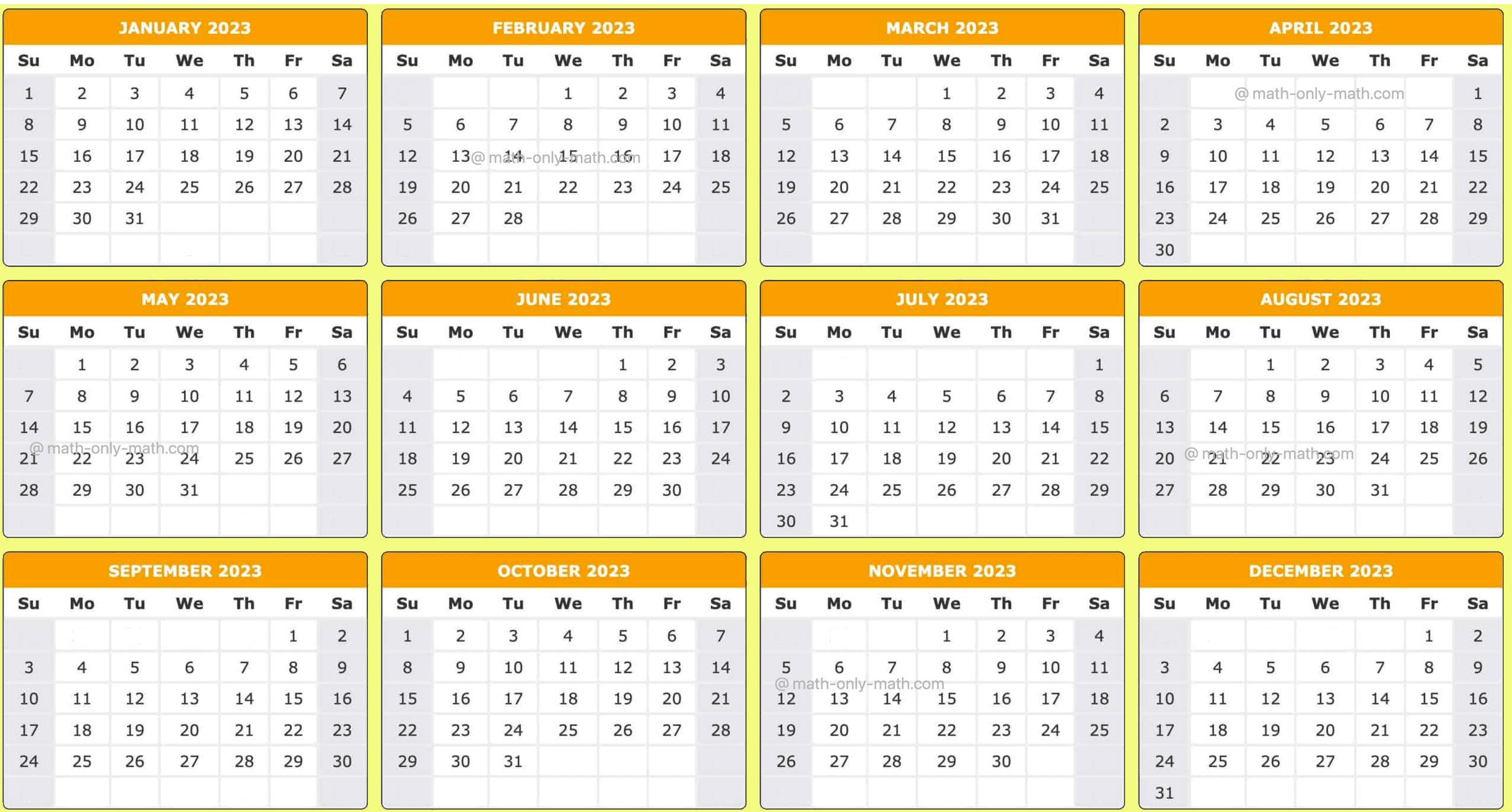

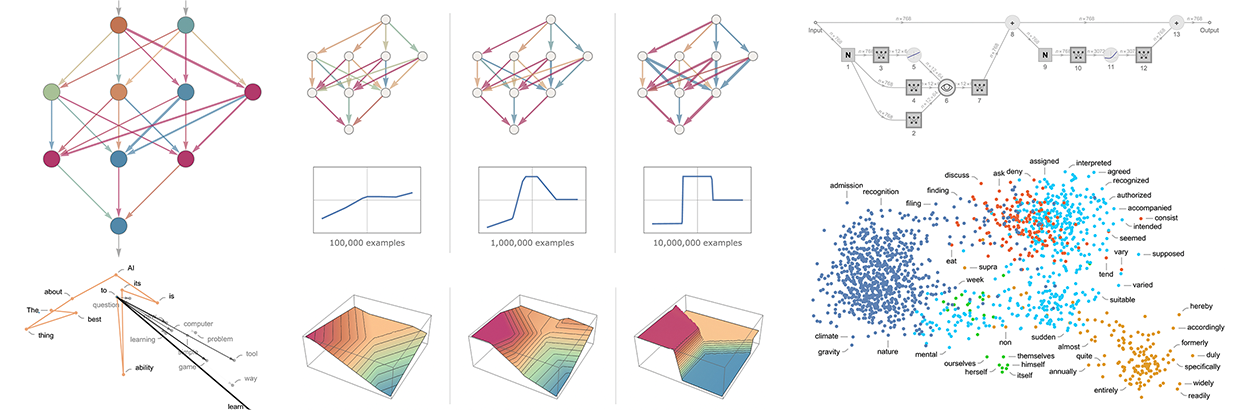

And so, for instance, we will consider a phrase embedding as making an attempt to lay out phrases in a sort of “which means house” through which phrases which can be one way or the other “close by in which means” seem close by within the embedding. The precise embeddings which can be used—say in ChatGPT—are likely to contain massive lists of numbers. But when we challenge all the way down to 2D, we will present examples of how phrases are laid out by the embedding:

And, sure, what we see does remarkably nicely in capturing typical on a regular basis impressions. However how can we assemble such an embedding? Roughly the thought is to have a look at massive quantities of textual content (right here 5 billion phrases from the online) after which see “how related” the “environments” are through which totally different phrases seem. So, for instance, “alligator” and “crocodile” will typically seem virtually interchangeably in in any other case related sentences, and meaning they’ll be positioned close by within the embedding. However “turnip” and “eagle” gained’t have a tendency to look in in any other case related sentences, in order that they’ll be positioned far aside within the embedding.

However how does one truly implement one thing like this utilizing neural nets? Let’s begin by speaking about embeddings not for phrases, however for photographs. We wish to discover some strategy to characterize photographs by lists of numbers in such a manner that “photographs we take into account related” are assigned related lists of numbers.

How will we inform if we should always “take into account photographs related”? Nicely, if our photographs are, say, of handwritten digits we’d “take into account two photographs related” if they’re of the identical digit. Earlier we mentioned a neural internet that was skilled to acknowledge handwritten digits. And we will consider this neural internet as being arrange in order that in its closing output it places photographs into 10 totally different bins, one for every digit.

However what if we “intercept” what’s happening contained in the neural internet earlier than the ultimate “it’s a ‘4’” resolution is made? We’d anticipate that contained in the neural internet there are numbers that characterize photographs as being “largely 4-like however a bit 2-like” or some such. And the thought is to select up such numbers to make use of as parts in an embedding.

So right here’s the idea. Reasonably than instantly making an attempt to characterize “what picture is close to what different picture”, we as an alternative take into account a well-defined job (on this case digit recognition) for which we will get express coaching knowledge—then use the truth that in doing this job the neural internet implicitly has to make what quantity to “nearness selections”. So as an alternative of us ever explicitly having to speak about “nearness of photographs” we’re simply speaking concerning the concrete query of what digit a picture represents, after which we’re “leaving it to the neural internet” to implicitly decide what that suggests about “nearness of photographs”.

So how in additional element does this work for the digit recognition community? We are able to consider the community as consisting of 11 successive layers, that we’d summarize iconically like this (with activation features proven as separate layers):

Initially we’re feeding into the primary layer precise photographs, represented by 2D arrays of pixel values. And on the finish—from the final layer—we’re getting out an array of 10 values, which we will consider saying “how sure” the community is that the picture corresponds to every of the digits 0 via 9.

Feed within the picture ![]() and the values of the neurons in that final layer are:

and the values of the neurons in that final layer are:

In different phrases, the neural internet is by this level “extremely sure” that this picture is a 4—and to truly get the output “4” we simply have to pick the place of the neuron with the biggest worth.

However what if we glance one step earlier? The final operation within the community is a so-called softmax which tries to “drive certainty”. However earlier than that’s been utilized the values of the neurons are:

The neuron representing “4” nonetheless has the very best numerical worth. However there’s additionally data within the values of the opposite neurons. And we will anticipate that this listing of numbers can in a way be used to characterize the “essence” of the picture—and thus to supply one thing we will use as an embedding. And so, for instance, every of the 4’s right here has a barely totally different “signature” (or “characteristic embedding”)—all very totally different from the 8’s:

Right here we’re basically utilizing 10 numbers to characterize our photographs. Nevertheless it’s typically higher to make use of far more than that. And for instance in our digit recognition community we will get an array of 500 numbers by tapping into the previous layer. And that is most likely an affordable array to make use of as an “picture embedding”.

If we wish to make an express visualization of “picture house” for handwritten digits we have to “cut back the dimension”, successfully by projecting the 500-dimensional vector we’ve obtained into, say, 3D house:

We’ve simply talked about making a characterization (and thus embedding) for photographs primarily based successfully on figuring out the similarity of photographs by figuring out whether or not (based on our coaching set) they correspond to the identical handwritten digit. And we will do the identical factor far more usually for photographs if we’ve got a coaching set that identifies, say, which of 5000 frequent kinds of object (cat, canine, chair, …) every picture is of. And on this manner we will make a picture embedding that’s “anchored” by our identification of frequent objects, however then “generalizes round that” based on the conduct of the neural internet. And the purpose is that insofar as that conduct aligns with how we people understand and interpret photographs, this can find yourself being an embedding that “appears proper to us”, and is beneficial in observe in doing “human-judgement-like” duties.

OK, so how will we observe the identical sort of method to seek out embeddings for phrases? The hot button is to begin from a job about phrases for which we will readily do coaching. And the usual such job is “phrase prediction”. Think about we’re given “the ___ cat”. Primarily based on a big corpus of textual content (say, the textual content content material of the online), what are the chances for various phrases that may “fill within the clean”? Or, alternatively, given “___ black ___” what are the chances for various “flanking phrases”?

How will we set this downside up for a neural internet? In the end we’ve got to formulate the whole lot when it comes to numbers. And a technique to do that is simply to assign a novel quantity to every of the 50,000 or so frequent phrases in English. So, for instance, “the” could be 914, and “ cat” (with an area earlier than it) could be 3542. (And these are the precise numbers utilized by GPT-2.) So for the “the ___ cat” downside, our enter could be {914, 3542}. What ought to the output be like? Nicely, it needs to be a listing of fifty,000 or so numbers that successfully give the chances for every of the doable “fill-in” phrases. And as soon as once more, to seek out an embedding, we wish to “intercept” the “insides” of the neural internet simply earlier than it “reaches its conclusion”—after which choose up the listing of numbers that happen there, and that we will consider as “characterizing every phrase”.

OK, so what do these characterizations appear like? Over the previous 10 years there’ve been a sequence of various methods developed (word2vec, GloVe, BERT, GPT, …), every primarily based on a unique neural internet method. However finally all of them take phrases and characterize them by lists of a whole lot to 1000’s of numbers.

Of their uncooked type, these “embedding vectors” are fairly uninformative. For instance, right here’s what GPT-2 produces because the uncooked embedding vectors for 3 particular phrases:

If we do issues like measure distances between these vectors, then we will discover issues like “nearnesses” of phrases. Later we’ll talk about in additional element what we’d take into account the “cognitive” significance of such embeddings. However for now the principle level is that we’ve got a strategy to usefully flip phrases into “neural-net-friendly” collections of numbers.

However truly we will go additional than simply characterizing phrases by collections of numbers; we will additionally do that for sequences of phrases, or certainly entire blocks of textual content. And inside ChatGPT that’s the way it’s coping with issues. It takes the textual content it’s obtained up to now, and generates an embedding vector to characterize it. Then its aim is to seek out the chances for various phrases that may happen subsequent. And it represents its reply for this as a listing of numbers that basically give the chances for every of the 50,000 or so doable phrases.

(Strictly, ChatGPT doesn’t cope with phrases, however fairly with “tokens”—handy linguistic models that could be entire phrases, or may simply be items like “pre” or “ing” or “ized”. Working with tokens makes it simpler for ChatGPT to deal with uncommon, compound and non-English phrases, and, typically, for higher or worse, to invent new phrases.)

Inside ChatGPT

OK, so we’re lastly prepared to debate what’s inside ChatGPT. And, sure, finally, it’s a large neural internet—at the moment a model of the so-called GPT-3 community with 175 billion weights. In some ways this can be a neural internet very very similar to the opposite ones we’ve mentioned. Nevertheless it’s a neural internet that’s notably arrange for coping with language. And its most notable characteristic is a chunk of neural internet structure referred to as a “transformer”.

Within the first neural nets we mentioned above, each neuron at any given layer was principally related (at the very least with some weight) to each neuron on the layer earlier than. However this type of totally related community is (presumably) overkill if one’s working with knowledge that has explicit, recognized construction. And thus, for instance, within the early levels of coping with photographs, it’s typical to make use of so-called convolutional neural nets (“convnets”) through which neurons are successfully laid out on a grid analogous to the pixels within the picture—and related solely to neurons close by on the grid.

The concept of transformers is to do one thing at the very least considerably related for sequences of tokens that make up a chunk of textual content. However as an alternative of simply defining a hard and fast area within the sequence over which there will be connections, transformers as an alternative introduce the notion of “consideration”—and the thought of “paying consideration” extra to some elements of the sequence than others. Perhaps at some point it’ll make sense to only begin a generic neural internet and do all customization via coaching. However at the very least as of now it appears to be essential in observe to “modularize” issues—as transformers do, and possibly as our brains additionally do.

OK, so what does ChatGPT (or, fairly, the GPT-3 community on which it’s primarily based) truly do? Recall that its total aim is to proceed textual content in a “affordable” manner, primarily based on what it’s seen from the coaching it’s had (which consists in taking a look at billions of pages of textual content from the online, and many others.) So at any given level, it’s obtained a specific amount of textual content—and its aim is to give you an acceptable alternative for the subsequent token so as to add.

It operates in three fundamental levels. First, it takes the sequence of tokens that corresponds to the textual content up to now, and finds an embedding (i.e. an array of numbers) that represents these. Then it operates on this embedding—in a “customary neural internet manner”, with values “rippling via” successive layers in a community—to provide a brand new embedding (i.e. a brand new array of numbers). It then takes the final a part of this array and generates from it an array of about 50,000 values that flip into possibilities for various doable subsequent tokens. (And, sure, it so occurs that there are about the identical variety of tokens used as there are frequent phrases in English, although solely about 3000 of the tokens are entire phrases, and the remainder are fragments.)

A essential level is that each a part of this pipeline is applied by a neural community, whose weights are decided by end-to-end coaching of the community. In different phrases, in impact nothing besides the general structure is “explicitly engineered”; the whole lot is simply “realized” from coaching knowledge.

There are, nevertheless, loads of particulars in the best way the structure is ready up—reflecting all types of expertise and neural internet lore. And—though that is undoubtedly going into the weeds—I believe it’s helpful to speak about a few of these particulars, not least to get a way of simply what goes into constructing one thing like ChatGPT.

First comes the embedding module. Right here’s a schematic Wolfram Language illustration for it for GPT-2:

The enter is a vector of n tokens (represented as within the earlier part by integers from 1 to about 50,000). Every of those tokens is transformed (by a single-layer neural internet) into an embedding vector (of size 768 for GPT-2 and 12,288 for ChatGPT’s GPT-3). In the meantime, there’s a “secondary pathway” that takes the sequence of (integer) positions for the tokens, and from these integers creates one other embedding vector. And at last the embedding vectors from the token worth and the token place are added collectively—to provide the ultimate sequence of embedding vectors from the embedding module.

Why does one simply add the token-value and token-position embedding vectors collectively? I don’t assume there’s any explicit science to this. It’s simply that varied various things have been tried, and that is one which appears to work. And it’s a part of the lore of neural nets that—in some sense—as long as the setup one has is “roughly proper” it’s often doable to dwelling in on particulars simply by doing adequate coaching, with out ever actually needing to “perceive at an engineering degree” fairly how the neural internet has ended up configuring itself.

Right here’s what the embedding module does, working on the string whats up whats up whats up whats up whats up whats up whats up whats up whats up whats up bye bye bye bye bye bye bye bye bye bye:

The weather of the embedding vector for every token are proven down the web page, and throughout the web page we see first a run of “whats up” embeddings, adopted by a run of “bye” ones. The second array above is the positional embedding—with its somewhat-random-looking construction being simply what “occurred to be realized” (on this case in GPT-2).

OK, so after the embedding module comes the “principal occasion” of the transformer: a sequence of so-called “consideration blocks” (12 for GPT-2, 96 for ChatGPT’s GPT-3). It’s all fairly difficult—and paying homage to typical massive hard-to-understand engineering methods, or, for that matter, organic methods. However anyway, right here’s a schematic illustration of a single “consideration block” (for GPT-2):

Inside every such consideration block there are a set of “consideration heads” (12 for GPT-2, 96 for ChatGPT’s GPT-3)—every of which operates independently on totally different chunks of values within the embedding vector. (And, sure, we don’t know any explicit motive why it’s a good suggestion to separate up the embedding vector, or what the totally different elements of it “imply”; that is simply a kind of issues that’s been “discovered to work”.)

OK, so what do the eye heads do? Principally they’re a manner of “trying again” within the sequence of tokens (i.e. within the textual content produced up to now), and “packaging up the previous” in a type that’s helpful for locating the subsequent token. Within the first part above we talked about utilizing 2-gram possibilities to select phrases primarily based on their speedy predecessors. What the “consideration” mechanism in transformers does is to permit “consideration to” even a lot earlier phrases—thus doubtlessly capturing the best way, say, verbs can confer with nouns that seem many phrases earlier than them in a sentence.

At a extra detailed degree, what an consideration head does is to recombine chunks within the embedding vectors related to totally different tokens, with sure weights. And so, for instance, the 12 consideration heads within the first consideration block (in GPT-2) have the next (“look-back-all-the-way-to-the-beginning-of-the-sequence-of-tokens”) patterns of “recombination weights” for the “whats up, bye” string above:

After being processed by the eye heads, the ensuing “re-weighted embedding vector” (of size 768 for GPT-2 and size 12,288 for ChatGPT’s GPT-3) is handed via a regular “totally related” neural internet layer. It’s exhausting to get a deal with on what this layer is doing. However right here’s a plot of the 768×768 matrix of weights it’s utilizing (right here for GPT-2):

Taking 64×64 transferring averages, some (random-walk-ish) construction begins to emerge:

What determines this construction? In the end it’s presumably some “neural internet encoding” of options of human language. However as of now, what these options could be is kind of unknown. In impact, we’re “opening up the mind of ChatGPT” (or at the very least GPT-2) and discovering, sure, it’s difficult in there, and we don’t perceive it—though ultimately it’s producing recognizable human language.

OK, so after going via one consideration block, we’ve obtained a brand new embedding vector—which is then successively handed via further consideration blocks (a complete of 12 for GPT-2; 96 for GPT-3). Every consideration block has its personal explicit sample of “consideration” and “totally related” weights. Right here for GPT-2 are the sequence of consideration weights for the “whats up, bye” enter, for the primary consideration head:

And listed below are the (moving-averaged) “matrices” for the totally related layers:

Curiously, though these “matrices of weights” in numerous consideration blocks look fairly related, the distributions of the sizes of weights will be considerably totally different (and should not at all times Gaussian):

So after going via all these consideration blocks what’s the internet impact of the transformer? Basically it’s to remodel the unique assortment of embeddings for the sequence of tokens to a closing assortment. And the actual manner ChatGPT works is then to select up the final embedding on this assortment, and “decode” it to provide a listing of possibilities for what token ought to come subsequent.

In order that’s in define what’s inside ChatGPT. It could appear difficult (not least due to its many inevitably considerably arbitrary “engineering decisions”), however truly the final word parts concerned are remarkably easy. As a result of ultimately what we’re coping with is only a neural internet made from “synthetic neurons”, every doing the straightforward operation of taking a set of numerical inputs, after which combining them with sure weights.

The unique enter to ChatGPT is an array of numbers (the embedding vectors for the tokens up to now), and what occurs when ChatGPT “runs” to provide a brand new token is simply that these numbers “ripple via” the layers of the neural internet, with every neuron “doing its factor” and passing the consequence to neurons on the subsequent layer. There’s no looping or “going again”. All the things simply “feeds ahead” via the community.

It’s a really totally different setup from a typical computational system—like a Turing machine—through which outcomes are repeatedly “reprocessed” by the identical computational parts. Right here—at the very least in producing a given token of output—every computational factor (i.e. neuron) is used solely as soon as.